This chapter covers common models for count data: bernoulli models, aggregated binomial models, Poisson and gamma-Poisson models, and multinomial/categorical models.

Exercises

11E1

If an event has probability 0.35, then the log-odds of the event are \[\mathrm{logit}\ 0.35 = \log \frac{0.35}{1 - 0.35} \approx -0.62.\]

11E2

If an event has log-odds 3.2, the probability of the event is \[\mathrm{logit}^{-1}\ 3.2 = \frac{\exp 3.2}{1 + \exp3.2} \approx 0.96\]

11E3

If the coefficient in a logistic regression has value 1.7, this implies that the log-odds of the outcome increase by 1.7 for every one-unit change in the predictor associated with that coefficient; or that the odds of the outcome are multiplied by \(\exp 1.7 \approx 5.47\) for every one-unit change in the predictor.

11E4

Poisson regressions require the use of an offset when different outcome observations are associated with different exposure times; or in other words, when you want to model rates instead of counts (of course when we model counts without an offset, we are also modeling rates but all the denominators are constant). For example, if you monitor the number of positive influenza diagnostic tests at multiple clinics, you need to use an offset that models the total number of flu tests performed at each clinic, or at least the total number of visitors. (We could also model this as a binomial outcome, but that is true for a lot of Poisson problems.)

11M1

When we reorganize data from the disaggregated to the aggregated binomial form, the likelihood changes because we also account for the number of potential ways to get the same aggregate outcome. The disaggregated model only considers the outcome for the specific order of event occurrences that we observed, while the aggregated model gives the likelihood for any sample with the same number of cases, which could have potentially arisen in many ways.

That means we should use the aggregated binomial form only when we truly know that any samples with the same number of cases is identical – for example, if our experimental units are individuals and we know which individuals experienced an outcome, these data would be disaggregate because we could potentially have other information that explains differences between individuals.

11M2

If a coefficient in a Poisson regression has value 1.7, assuming the typical log link function, that implies that a one unit change in the associated predictor leads to a \(\exp 1.7 \approx 5.47\) -fold change in the outcome; or an equivalent \(5.47\) -fold change in the rate of outcome occurrence.

11M3

The logit link is typically appropriate for a binomial GLM because we apply a linear model to the parameter \(p\) , which is a probability. The logit link is a function that maps \((0, 1) \to \mathbb{R}\) , and all probabilities have to be in the interval \([0, 1]\) . So the logit link ensures that the real-valued linear predictor is mapped to the allowed domain of the parameter \(p\) .

11M4

The log link is typically appropriate for a Poisson GLM because we apply a linear model to the only parametr \(\lambda\) , which only has support on \((0, \infty)\) . The log link function maps the input from \((0, \infty) \to \mathbb{R}\) , so \(\log \lambda\) and the linear predictor have the same allowed values.

11M5

Using a logit link for the mean of a Poisson GLM would imply that \(\lambda \in (0, 1)\) is a necessary constraint for the problem. When \(\lambda < 1\) , the highest probility value for the Poisson distribution is 0, so we would want to do this on data where we know a priori that \(0\) is the most common outcome, but larger numbers can happen.

11M6

The binomial distribution has maximum entropy when the data have two possible values and the expected value is constant (i.e., when the probability of each outcome is constant). Since the Poisson distribution is a special limiting case of the binomial distribution, it must have the same maximum entropy conditions.

11M7

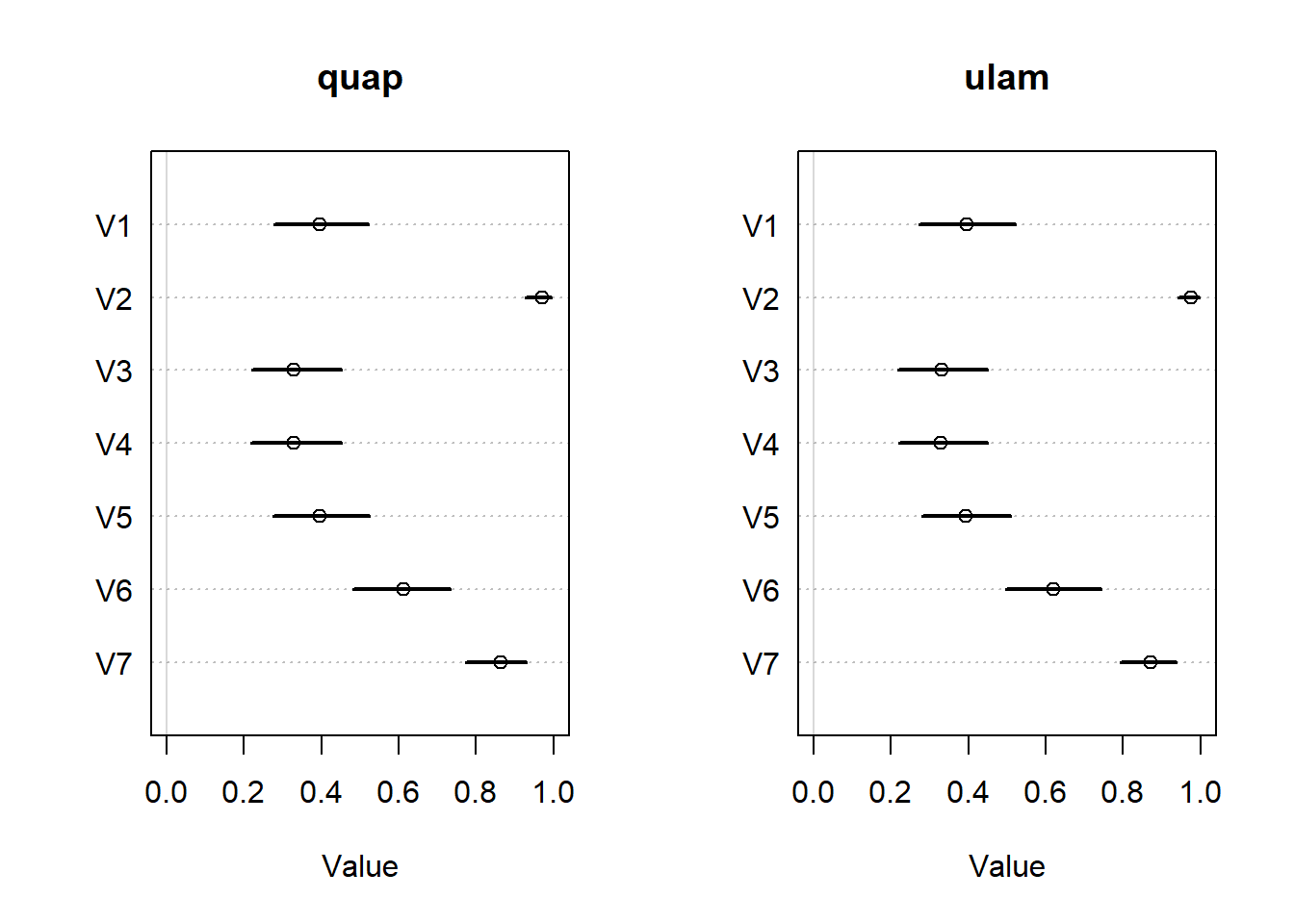

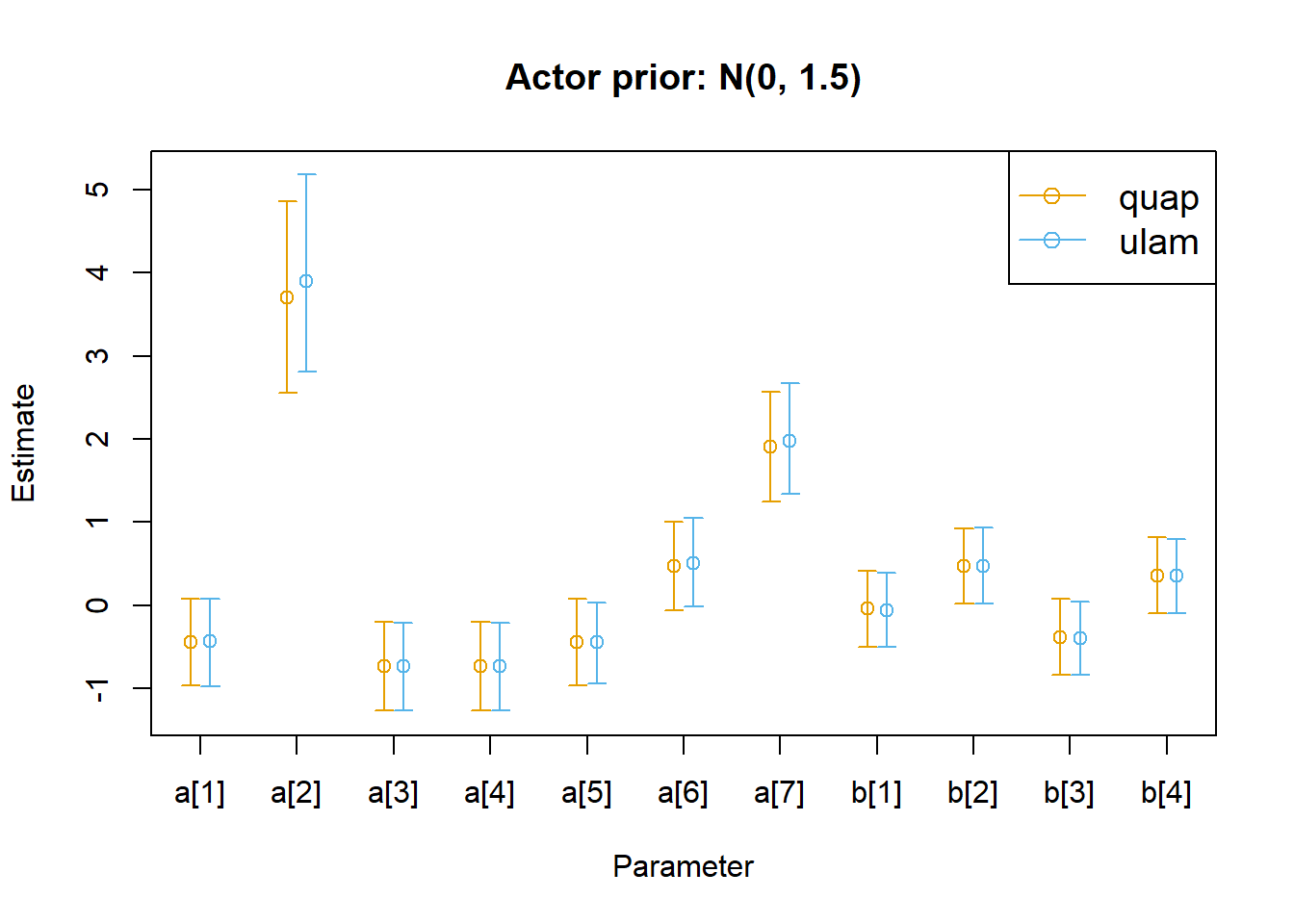

We’ll recreate the chimpanzee model using both quap (quadratic approximation) and ulam (HMC) to compare the differences between the fitting methods.

data (chimpanzees, package = "rethinking" )<- chimpanzees$ treatment <- 1 + d$ prosoc_left + 2 * d$ condition<- list (pulled_left = d$ pulled_left,actor = d$ actor,treatment = as.integer (d$ treatment)<- alist (~ dbinom (1 , p),logit (p) <- a[actor] + b[treatment],~ dnorm (0 , 1.5 ),~ dnorm (0 , 0.5 ).4 _quap <- rethinking:: quap (flist = chimp_model,data = dat_list.4 _ulam <- rethinking:: ulam (flist = chimp_model,data = dat_list,chains = 4 ,log_lik = TRUE

In file included from stan/src/stan/model/model_header.hpp:5:

stan/src/stan/model/model_base_crtp.hpp:205:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, std::vector<double>&, std::vector<int>&, std::vector<double>&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model_namespace::ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

205 | void write_array(stan::rng_t& rng, std::vector<double>& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c144d30df.hpp:492: note: by 'ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model_namespace::ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model::write_array'

492 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

stan/src/stan/model/model_base_crtp.hpp:136:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, Eigen::VectorXd&, Eigen::VectorXd&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model_namespace::ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; Eigen::VectorXd = Eigen::Matrix<double, -1, 1>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

136 | void write_array(stan::rng_t& rng, Eigen::VectorXd& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c144d30df.hpp:492: note: by 'ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model_namespace::ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model::write_array'

492 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

Running MCMC with 4 sequential chains, with 1 thread(s) per chain...

Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 1 finished in 1.3 seconds.

Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 finished in 1.4 seconds.

Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 3 finished in 1.4 seconds.

Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 4 finished in 1.3 seconds.

All 4 chains finished successfully.

Mean chain execution time: 1.3 seconds.

Total execution time: 5.8 seconds.

And we’ll compare the predictions of the actor effects between both models.

<- rethinking:: extract.samples (m11.4 _quap)<- rethinking:: extract.samples (m11.4 _ulam)<- rethinking:: inv_logit (post_quap$ a)<- rethinking:: inv_logit (post_ulam$ a)layout (matrix (1 : 2 , nrow = 1 ))plot (:: precis (as.data.frame (pl_quap)),xlim = c (0 , 1 ),main = "quap" plot (:: precis (as.data.frame (pl_ulam)),xlim = c (0 , 1 ),main = "ulam"

OK, those posteriors look exactly the same, so we better check the next posterior plot that’s shown in the chapter.

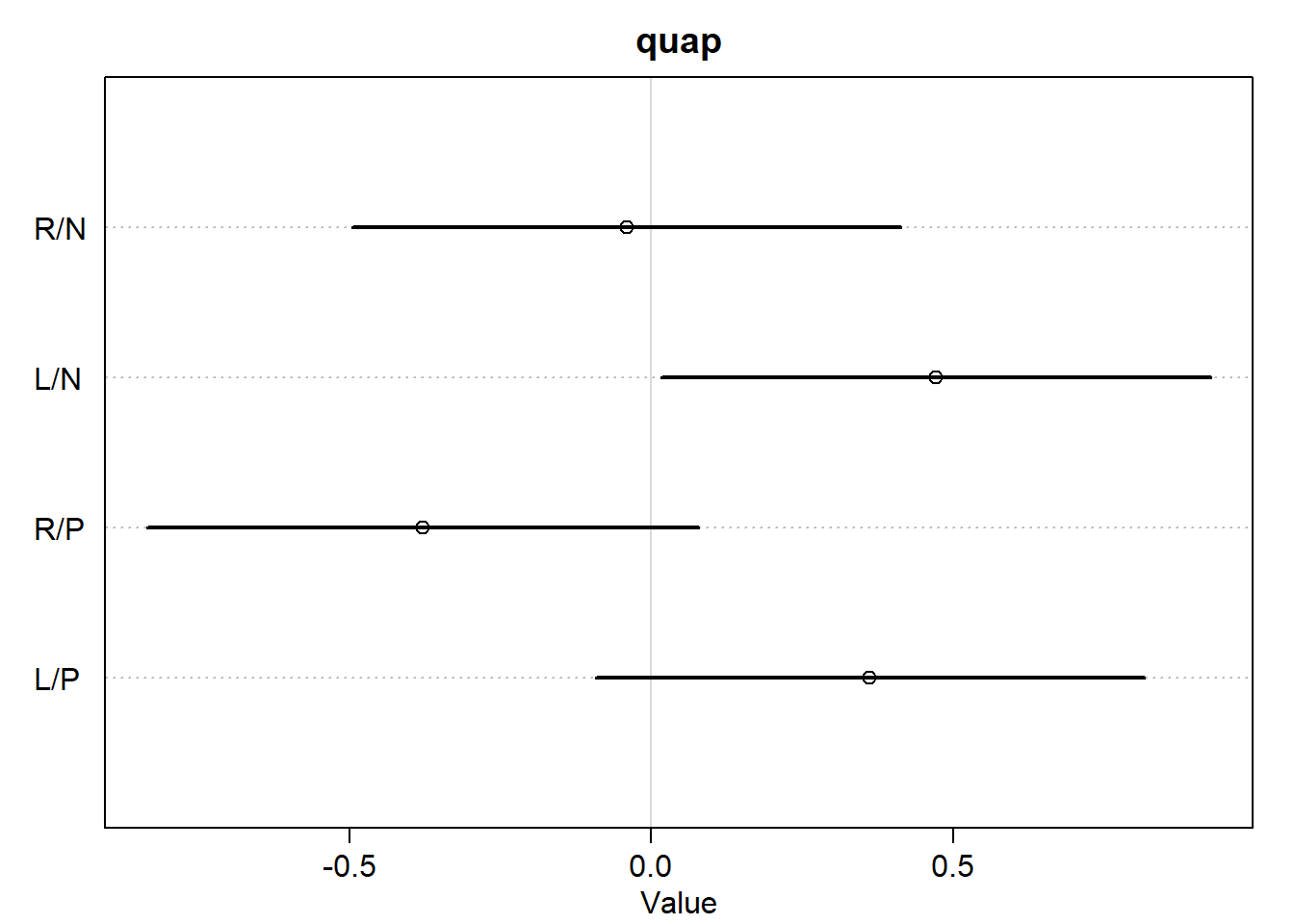

<- c ("R/N" , "L/N" , "R/P" , "L/P" )plot (:: precis (m11.4 _quap, depth = 2 , pars = "b" ),labels = labs,main = "quap" plot (:: precis (m11.4 _ulam, depth = 2 , pars = "b" ),labels = labs,main = "ulam"

OK, there are a few tiny differences, but the two models still look almost exactly the same for practical purposes. Let’s make the contrast plot just to be safe.

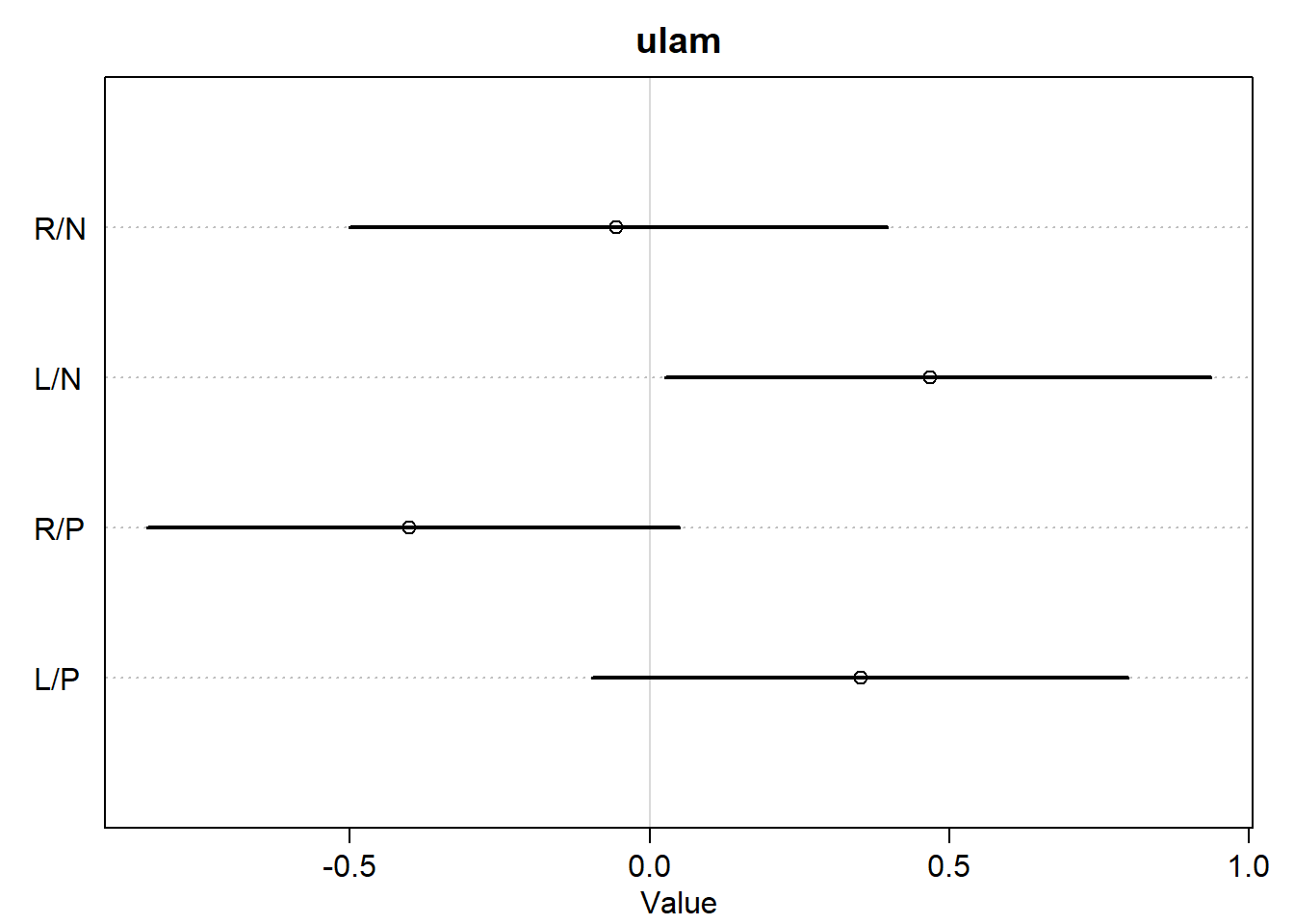

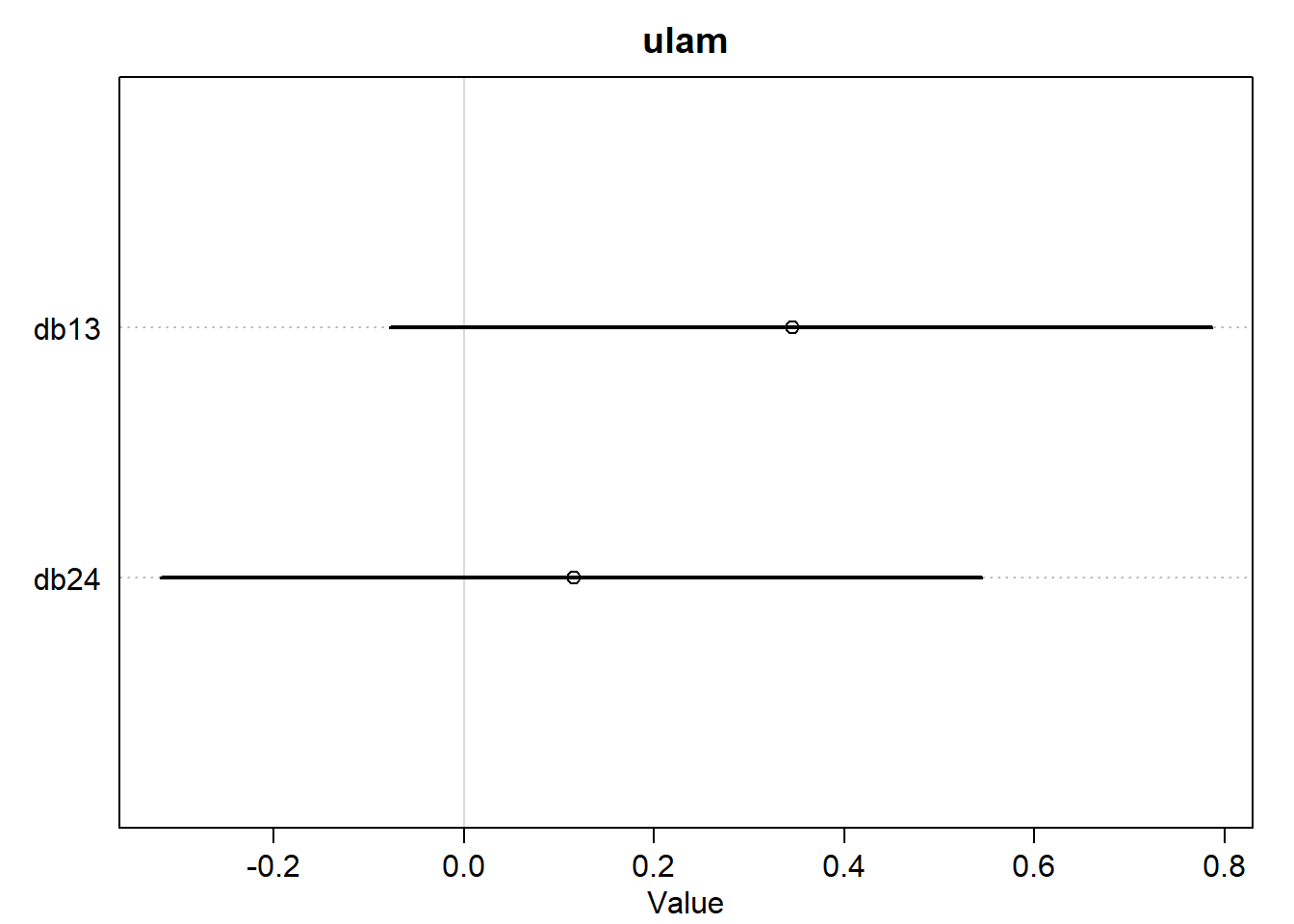

<- list (db13 = post_quap$ b[,1 ] - post_quap$ b[,3 ],db24 = post_quap$ b[,2 ] - post_quap$ b[,4 ]<- list (db13 = post_ulam$ b[,1 ] - post_ulam$ b[,3 ],db24 = post_ulam$ b[,2 ] - post_ulam$ b[,4 ]plot (:: precis (diffs_quap),main = "quap" plot (:: precis (diffs_ulam),main = "ulam"

OK, those also look exactly the same. The way the question is wording is sort of leading and I was expecting to see a difference. But I guess we better just plot the exact parameter estimates.

Show plot code (long-ish)

<- rethinking:: precis (m11.4 _quap, depth = 2 ) |> as.data.frame ()<- rethinking:: precis (m11.4 _ulam, depth = 2 ) |> as.data.frame ()<- nrow (parms_quap)<- c (0.9 , n + 0.1 )<- c (min (min (parms_quap$ ` 5.5% ` , parms_ulam$ ` 5.5% ` )),max (max (parms_quap$ ` 94.5% ` , parms_ulam$ ` 94.5% ` ))|> round (1 )<- 0.1 # dodge factor # Plot setup layout (1 )plot (NULL , NULL ,xlim = xrange,ylim = yrange,xlab = "Parameter" ,ylab = "Estimate" ,xaxt = "n" ,main = "Actor prior: N(0, 1.5)" axis (1 , at = 1 : n, labels = rownames (parms_quap))legend ("topright" ,legend = c ("quap" , "ulam" ),col = c ("#e69f00" , "#56b4e9" ),pch = 1 ,lty = 1 ,cex = 1.2 # quap estimates points (x = 1 : n - d,y = parms_quap$ mean,col = "#e69f00" arrows (x0 = 1 : n - d,,y0 = parms_quap$ ` 5.5% ` ,x = 1 : n - d,y = parms_quap$ ` 94.5% ` ,length = 0.05 ,angle = 90 ,code = 3 ,col = "#e69f00" # ulam estimates points (x = 1 : n + d,y = parms_ulam$ mean,col = "#56b4e9" arrows (x0 = 1 : n + d,,y0 = parms_ulam$ ` 5.5% ` ,x = 1 : n + d,y = parms_ulam$ ` 94.5% ` ,length = 0.05 ,angle = 90 ,code = 3 ,col = "#56b4e9"

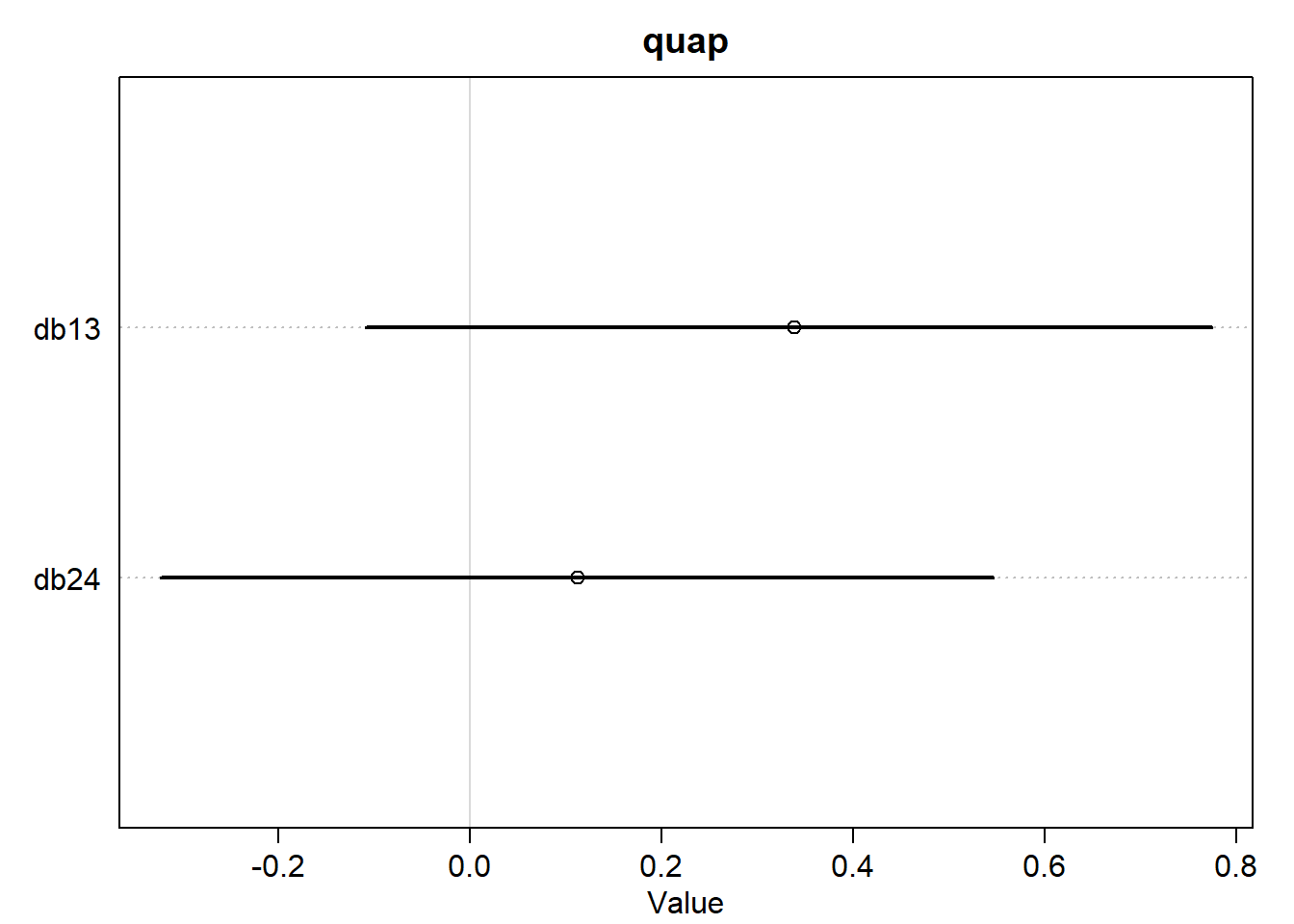

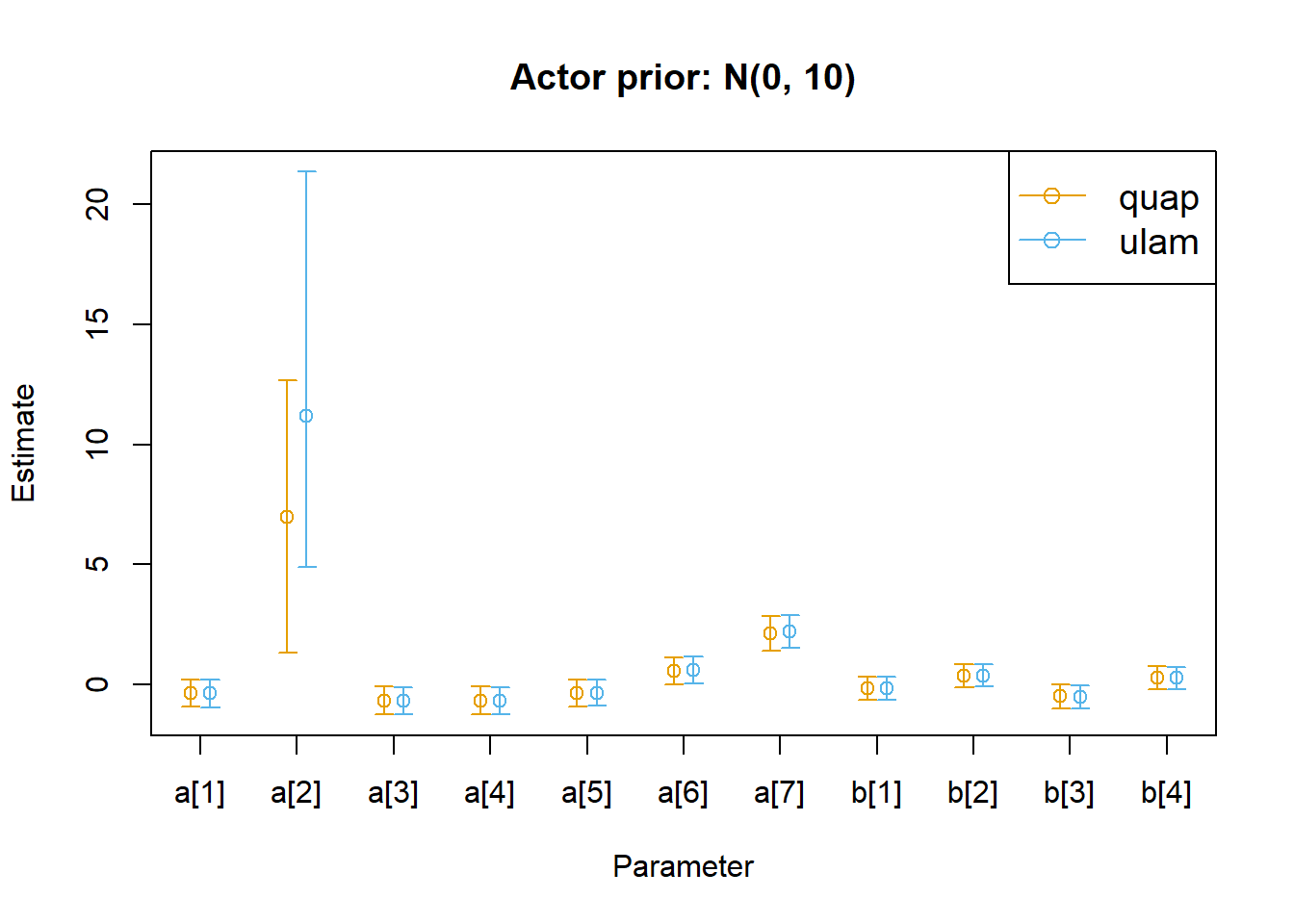

Yeah, the parameter estimates are almost all identical. Maybe that was the point, let’s try relaxing the priors on the actor intercepts to see if that changes the estimates.

<- alist (~ dbinom (1 , p),logit (p) <- a[actor] + b[treatment],~ dnorm (0 , 10 ),~ dnorm (0 , 0.5 ).4 _r_quap <- rethinking:: quap (flist = chimp_model_r,data = dat_list.4 _r_ulam <- rethinking:: ulam (flist = chimp_model_r,data = dat_list,chains = 4 , cores = 4 ,log_lik = TRUE

In file included from stan/src/stan/model/model_header.hpp:5:

stan/src/stan/model/model_base_crtp.hpp:205:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, std::vector<double>&, std::vector<int>&, std::vector<double>&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_6d6480bb4a0ed7b052bc83ca092e6e10_model_namespace::ulam_cmdstanr_6d6480bb4a0ed7b052bc83ca092e6e10_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

205 | void write_array(stan::rng_t& rng, std::vector<double>& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c2c305688.hpp:492: note: by 'ulam_cmdstanr_6d6480bb4a0ed7b052bc83ca092e6e10_model_namespace::ulam_cmdstanr_6d6480bb4a0ed7b052bc83ca092e6e10_model::write_array'

492 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

stan/src/stan/model/model_base_crtp.hpp:136:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, Eigen::VectorXd&, Eigen::VectorXd&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_6d6480bb4a0ed7b052bc83ca092e6e10_model_namespace::ulam_cmdstanr_6d6480bb4a0ed7b052bc83ca092e6e10_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; Eigen::VectorXd = Eigen::Matrix<double, -1, 1>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

136 | void write_array(stan::rng_t& rng, Eigen::VectorXd& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c2c305688.hpp:492: note: by 'ulam_cmdstanr_6d6480bb4a0ed7b052bc83ca092e6e10_model_namespace::ulam_cmdstanr_6d6480bb4a0ed7b052bc83ca092e6e10_model::write_array'

492 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

Running MCMC with 4 parallel chains, with 1 thread(s) per chain...

Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 1 finished in 1.9 seconds.

Chain 2 finished in 1.8 seconds.

Chain 3 finished in 1.8 seconds.

Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 4 finished in 2.1 seconds.

All 4 chains finished successfully.

Mean chain execution time: 1.9 seconds.

Total execution time: 2.2 seconds.

This time we’ll go straight to the parameters plot to see if there are any differences between the model fits.

Show plot code (long-ish)

<- rethinking:: precis (m11.4 _r_quap, depth = 2 ) |> as.data.frame ()<- rethinking:: precis (m11.4 _r_ulam, depth = 2 ) |> as.data.frame ()<- nrow (parms_r_quap)<- c (0.9 , n + 0.1 )<- c (min (min (parms_r_quap$ ` 5.5% ` , parms_r_ulam$ ` 5.5% ` )),max (max (parms_r_quap$ ` 94.5% ` , parms_r_ulam$ ` 94.5% ` ))|> round (1 )<- 0.1 # dodge factor # Plot setup layout (1 )plot (NULL , NULL ,xlim = xrange,ylim = yrange,xlab = "Parameter" ,ylab = "Estimate" ,xaxt = "n" ,main = "Actor prior: N(0, 10)" axis (1 , at = 1 : n, labels = rownames (parms_r_quap))legend ("topright" ,legend = c ("quap" , "ulam" ),col = c ("#e69f00" , "#56b4e9" ),pch = 1 ,lty = 1 ,cex = 1.2 # quap estimates points (x = 1 : n - d,y = parms_r_quap$ mean,col = "#e69f00" arrows (x0 = 1 : n - d,,y0 = parms_r_quap$ ` 5.5% ` ,x = 1 : n - d,y = parms_r_quap$ ` 94.5% ` ,length = 0.05 ,angle = 90 ,code = 3 ,col = "#e69f00" # ulam estimates points (x = 1 : n + d,y = parms_r_ulam$ mean,col = "#56b4e9" arrows (x0 = 1 : n + d,,y0 = parms_r_ulam$ ` 5.5% ` ,x = 1 : n + d,y = parms_r_ulam$ ` 94.5% ` ,length = 0.05 ,angle = 90 ,code = 3 ,col = "#56b4e9"

In this case, the two algorithms still give us really similar results, but we lose the regularizing effect of our priors on the outlier case for actor 2. Our priors now presuppose a wider range of effect sizes, and actor 2 pulled the same lever in every case. So we have no data to pull the effect estimate away from the wide prior for this actor, whereas our previous weakly informative prior was providing a regularizing effect on the allowable values for this actor. But there’s no real difference between the quap and ulam fitted parameters.

I checked the solutions manual to see if maybe older versions of quap() behaved differently, but Richard McElreath seems to just have a different interpretation of “different” parameter values to me, as he interprets the values for actor 2 being different in the original model, and then being more different in the relaxed prior model. While I agree that there are numerical differences, I would argue that they aren’t meaningful in any substantial way, especially for the first model.

11M8

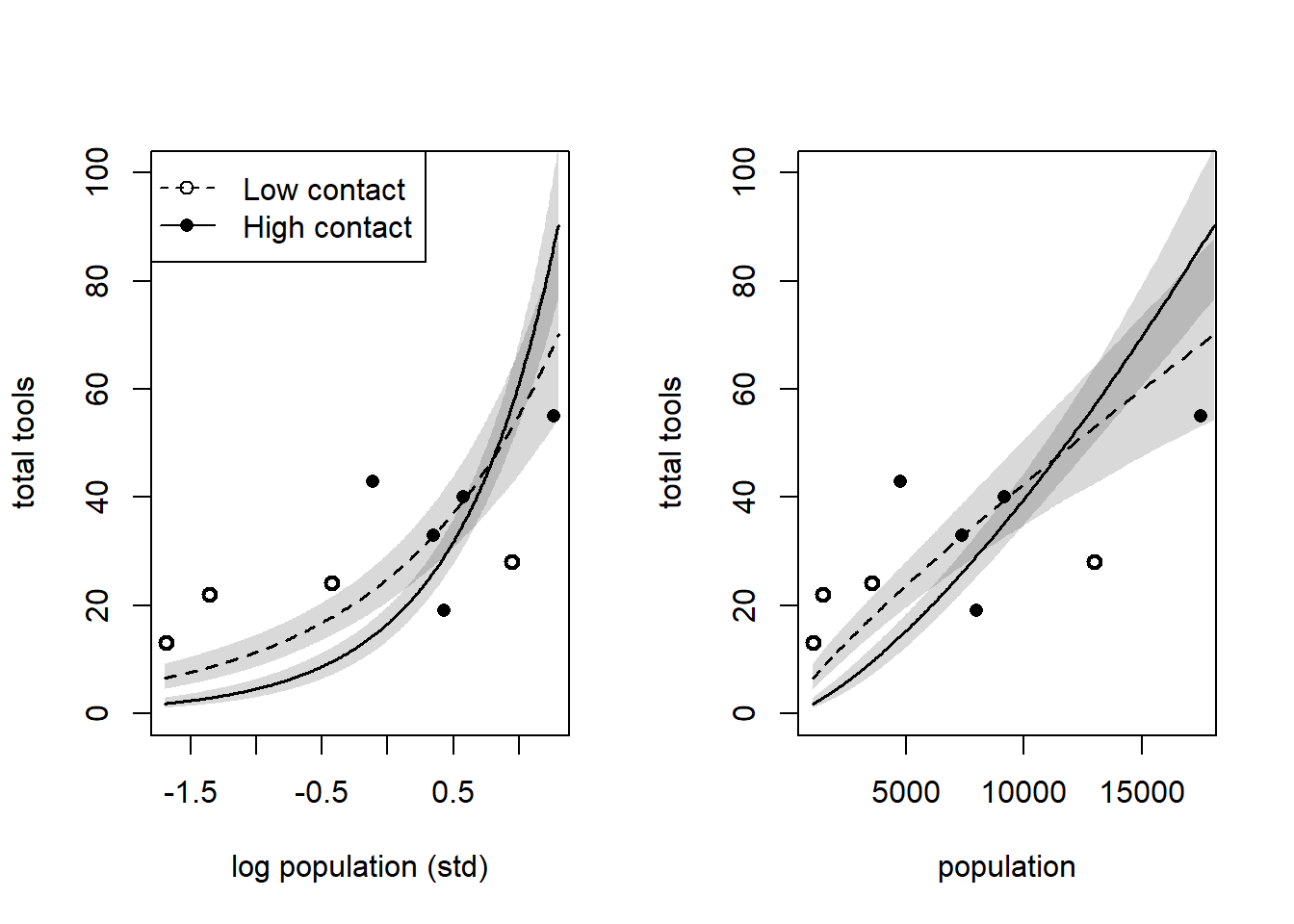

For this exercise, we’ll refit the island tools model but exclude Hawaii from the sample.

data (Kline, package = "rethinking" )<- Kline[Kline$ culture != "Hawaii" , ]$ P <- scale (log (d$ population))$ contact_id <- ifelse (d$ contact == "high" , 2 , 1 )<- list (T = d$ total_tools,P = d$ P,cid = d$ contact_id<- rethinking:: ulam (alist (~ dpois (lambda),log (lambda) <- a[cid] + b[cid]* P,~ dnorm (3 , 0.5 ),~ dnorm (3 , 0.2 )data = dat,chains = 4 , cores = 4 ,log_lik = TRUE

In file included from stan/src/stan/model/model_header.hpp:5:

stan/src/stan/model/model_base_crtp.hpp:205:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, std::vector<double>&, std::vector<int>&, std::vector<double>&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_a7bafce69a312f89c664fe4c97927155_model_namespace::ulam_cmdstanr_a7bafce69a312f89c664fe4c97927155_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

205 | void write_array(stan::rng_t& rng, std::vector<double>& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c1a9a1953.hpp:505: note: by 'ulam_cmdstanr_a7bafce69a312f89c664fe4c97927155_model_namespace::ulam_cmdstanr_a7bafce69a312f89c664fe4c97927155_model::write_array'

505 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

stan/src/stan/model/model_base_crtp.hpp:136:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, Eigen::VectorXd&, Eigen::VectorXd&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_a7bafce69a312f89c664fe4c97927155_model_namespace::ulam_cmdstanr_a7bafce69a312f89c664fe4c97927155_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; Eigen::VectorXd = Eigen::Matrix<double, -1, 1>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

136 | void write_array(stan::rng_t& rng, Eigen::VectorXd& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c1a9a1953.hpp:505: note: by 'ulam_cmdstanr_a7bafce69a312f89c664fe4c97927155_model_namespace::ulam_cmdstanr_a7bafce69a312f89c664fe4c97927155_model::write_array'

505 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

Running MCMC with 4 parallel chains, with 1 thread(s) per chain...

Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 1 finished in 0.1 seconds.

Chain 2 finished in 0.1 seconds.

Chain 3 finished in 0.0 seconds.

Chain 4 finished in 0.0 seconds.

All 4 chains finished successfully.

Mean chain execution time: 0.1 seconds.

Total execution time: 0.3 seconds.

Let’s get the predicts and make a plot.

Show code (long)

layout (matrix (1 : 2 , nrow = 1 ))plot ($ P, dat$ T,xlab = "log population (std)" ,ylab = "total tools" ,pch = ifelse (dat$ cid == 1 , 1 , 16 ),lwd = 2 ,ylim = c (0 , 100 )<- seq (from = - 1.7 , to = 1.3 , by = 0.01 )# Predictions for cid = 1 <- rethinking:: link (m_11m8, data = data.frame (P = P_seq, cid = 1 ))<- apply (lambda, 2 , mean)<- apply (lambda, 2 , rethinking:: PI)lines (P_seq, lmu, lty = 2 , lwd = 1.5 ):: shade (lci, P_seq, xpd = TRUE )# Predictions for cid = 2 <- rethinking:: link (m_11m8, data = data.frame (P = P_seq, cid = 2 ))<- apply (lambda, 2 , mean)<- apply (lambda, 2 , rethinking:: PI)lines (P_seq, lmu, lty = 1 , lwd = 1.5 ):: shade (lci, P_seq, xpd = TRUE )legend ("topleft" ,pch = c (1 , 16 ),lty = c (2 , 1 ),legend = c ("Low contact" , "High contact" )# Natural scale population plot plot ($ population, d$ total_tools,xlab = "population" , ylab = "total tools" ,pch = ifelse (dat$ cid == 1 , 1 , 16 ),lwd = 2 ,ylim = c (0 , 100 )<- exp (P_seq * 0.94 + 8.58 )# Predictions for cid = 1 <- rethinking:: link (m_11m8, data = data.frame (P = P_seq, cid = 1 ))<- apply (lambda, 2 , mean)<- apply (lambda, 2 , rethinking:: PI)lines (pop_seq, lmu, lty = 2 , lwd = 1.5 ):: shade (lci, pop_seq, xpd = TRUE )# Predictions for cid = 2 <- rethinking:: link (m_11m8, data = data.frame (P = P_seq, cid = 2 ))<- apply (lambda, 2 , mean)<- apply (lambda, 2 , rethinking:: PI)lines (pop_seq, lmu, lty = 1 , lwd = 1.5 ):: shade (lci, pop_seq, xpd = TRUE )

Interestingly, without Hawaii in the model, the model now predicts that the number of tools will grow faster, and we don’t see diminishing returns of population as strongly as we do in the model with Hawaii. We still see the crossover effect though, indicating that the model predicts that past a certain population threshold, the rate of acquiring tools increases faster for high contact populations than for low contact populations. Interestingly, our model without Hawaii also implies that high contact populations acquire tools slower than low contact populations for low population sizes, which is the opposite of what theory and the model with Hawaii told us. But that’s likely because the low-contact populations all have smaller population sizes other than Hawaii and Manus, while there are no comparable high-contact populations with the smallest population sizes.

So Hawaii is influential on our model, but that seems to be a good thing – as Hawaii has a population an order of magnitude higher than Tonga (the culture with the second largest population) but only 20 more tools than Tonga, it helps to limit the model estimation of the number of tools acquired as the population grows.

11H1

For this problem, we want to compare the simpler chimpanzee lever models to the model with unique actor intercepts.

First I have to do the data processing again, cause I overwrote the data d in the Kline data problem, which made me really think I should use unique variable names instead of just copying d from the textbook. But I’m going to do that again. Then we’ll fit the three models to compare (m11.2 and m11.3 are the same other than the choice of priors for the treatment effect).

data (chimpanzees, package = "rethinking" )<- chimpanzees$ treatment <- 1 + d$ prosoc_left + 2 * d$ condition<- list (pulled_left = d$ pulled_left,actor = d$ actor,treatment = as.integer (d$ treatment).1 <- rethinking:: ulam (flist = alist (~ dbinom (1 , p),logit (p) <- a,~ dnorm (0 , 10 )data = dat_list,chains = 4 , cores = 4 ,log_lik = TRUE

In file included from stan/src/stan/model/model_header.hpp:5:

stan/src/stan/model/model_base_crtp.hpp:205:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, std::vector<double>&, std::vector<int>&, std::vector<double>&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_6381f795dc1f3858984a57023b55cf39_model_namespace::ulam_cmdstanr_6381f795dc1f3858984a57023b55cf39_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

205 | void write_array(stan::rng_t& rng, std::vector<double>& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c6c181b15.hpp:366: note: by 'ulam_cmdstanr_6381f795dc1f3858984a57023b55cf39_model_namespace::ulam_cmdstanr_6381f795dc1f3858984a57023b55cf39_model::write_array'

366 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

stan/src/stan/model/model_base_crtp.hpp:136:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, Eigen::VectorXd&, Eigen::VectorXd&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_6381f795dc1f3858984a57023b55cf39_model_namespace::ulam_cmdstanr_6381f795dc1f3858984a57023b55cf39_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; Eigen::VectorXd = Eigen::Matrix<double, -1, 1>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

136 | void write_array(stan::rng_t& rng, Eigen::VectorXd& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c6c181b15.hpp:366: note: by 'ulam_cmdstanr_6381f795dc1f3858984a57023b55cf39_model_namespace::ulam_cmdstanr_6381f795dc1f3858984a57023b55cf39_model::write_array'

366 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

Running MCMC with 4 parallel chains, with 1 thread(s) per chain...

Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 1 finished in 0.5 seconds.

Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 finished in 0.5 seconds.

Chain 3 finished in 0.5 seconds.

Chain 4 finished in 0.5 seconds.

All 4 chains finished successfully.

Mean chain execution time: 0.5 seconds.

Total execution time: 0.7 seconds.

.3 <- rethinking:: ulam (flist = alist (~ dbinom (1 , p),logit (p) <- a + b[treatment],~ dnorm (0 , 1.5 ),~ dnorm (0 , 0.5 )data = dat_list,chains = 4 , cores = 4 ,log_lik = TRUE

In file included from stan/src/stan/model/model_header.hpp:5:

stan/src/stan/model/model_base_crtp.hpp:205:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, std::vector<double>&, std::vector<int>&, std::vector<double>&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_eae524465d9a8f4b995df3f74d53e0a0_model_namespace::ulam_cmdstanr_eae524465d9a8f4b995df3f74d53e0a0_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

205 | void write_array(stan::rng_t& rng, std::vector<double>& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c232e4fd6.hpp:461: note: by 'ulam_cmdstanr_eae524465d9a8f4b995df3f74d53e0a0_model_namespace::ulam_cmdstanr_eae524465d9a8f4b995df3f74d53e0a0_model::write_array'

461 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

stan/src/stan/model/model_base_crtp.hpp:136:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, Eigen::VectorXd&, Eigen::VectorXd&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_eae524465d9a8f4b995df3f74d53e0a0_model_namespace::ulam_cmdstanr_eae524465d9a8f4b995df3f74d53e0a0_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; Eigen::VectorXd = Eigen::Matrix<double, -1, 1>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

136 | void write_array(stan::rng_t& rng, Eigen::VectorXd& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c232e4fd6.hpp:461: note: by 'ulam_cmdstanr_eae524465d9a8f4b995df3f74d53e0a0_model_namespace::ulam_cmdstanr_eae524465d9a8f4b995df3f74d53e0a0_model::write_array'

461 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

Running MCMC with 4 parallel chains, with 1 thread(s) per chain...

Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 1 finished in 1.7 seconds.

Chain 2 finished in 1.7 seconds.

Chain 4 finished in 1.6 seconds.

Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 3 finished in 1.7 seconds.

All 4 chains finished successfully.

Mean chain execution time: 1.7 seconds.

Total execution time: 1.9 seconds.

.4 <- rethinking:: ulam (flist = alist (~ dbinom (1 , p),logit (p) <- a[actor] + b[treatment],~ dnorm (0 , 1.5 ),~ dnorm (0 , 0.5 )data = dat_list,chains = 4 , cores = 4 ,log_lik = TRUE

In file included from stan/src/stan/model/model_header.hpp:5:

stan/src/stan/model/model_base_crtp.hpp:205:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, std::vector<double>&, std::vector<int>&, std::vector<double>&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model_namespace::ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

205 | void write_array(stan::rng_t& rng, std::vector<double>& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c316b40f4.hpp:492: note: by 'ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model_namespace::ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model::write_array'

492 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

stan/src/stan/model/model_base_crtp.hpp:136:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, Eigen::VectorXd&, Eigen::VectorXd&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model_namespace::ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; Eigen::VectorXd = Eigen::Matrix<double, -1, 1>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

136 | void write_array(stan::rng_t& rng, Eigen::VectorXd& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c316b40f4.hpp:492: note: by 'ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model_namespace::ulam_cmdstanr_78180b32ba80da6bc9c4f0f8342b99b3_model::write_array'

492 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

Running MCMC with 4 parallel chains, with 1 thread(s) per chain...

Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 4 finished in 1.4 seconds.

Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 1 finished in 1.5 seconds.

Chain 2 finished in 1.5 seconds.

Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 3 finished in 1.6 seconds.

All 4 chains finished successfully.

Mean chain execution time: 1.5 seconds.

Total execution time: 1.7 seconds.

.5 <- rethinking:: ulam (flist = alist (~ dbinom (1 , p),logit (p) <- a[actor],~ dnorm (0 , 1.5 )data = dat_list,chains = 4 , cores = 4 ,log_lik = TRUE

In file included from stan/src/stan/model/model_header.hpp:5:

stan/src/stan/model/model_base_crtp.hpp:205:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, std::vector<double>&, std::vector<int>&, std::vector<double>&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_3db5f73fee2854c436e38eab75da6b9b_model_namespace::ulam_cmdstanr_3db5f73fee2854c436e38eab75da6b9b_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

205 | void write_array(stan::rng_t& rng, std::vector<double>& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c7c6a7199.hpp:432: note: by 'ulam_cmdstanr_3db5f73fee2854c436e38eab75da6b9b_model_namespace::ulam_cmdstanr_3db5f73fee2854c436e38eab75da6b9b_model::write_array'

432 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

stan/src/stan/model/model_base_crtp.hpp:136:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, Eigen::VectorXd&, Eigen::VectorXd&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_3db5f73fee2854c436e38eab75da6b9b_model_namespace::ulam_cmdstanr_3db5f73fee2854c436e38eab75da6b9b_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; Eigen::VectorXd = Eigen::Matrix<double, -1, 1>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

136 | void write_array(stan::rng_t& rng, Eigen::VectorXd& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c7c6a7199.hpp:432: note: by 'ulam_cmdstanr_3db5f73fee2854c436e38eab75da6b9b_model_namespace::ulam_cmdstanr_3db5f73fee2854c436e38eab75da6b9b_model::write_array'

432 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

Running MCMC with 4 parallel chains, with 1 thread(s) per chain...

Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 1 finished in 1.3 seconds.

Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 finished in 1.3 seconds.

Chain 3 finished in 1.3 seconds.

Chain 4 finished in 1.3 seconds.

All 4 chains finished successfully.

Mean chain execution time: 1.3 seconds.

Total execution time: 1.4 seconds.

Now we’ll compare the models using PSIS.

:: compare (.1 , m11.3 , m11.4 ,sort = "PSIS" , func = PSIS

PSIS SE dPSIS dSE pPSIS weight

m11.4 532.4716 18.976515 0.0000 NA 8.593902 1.000000e+00

m11.3 682.5700 9.216209 150.0984 18.46056 3.698773 2.550081e-33

m11.1 688.0192 7.273776 155.5476 19.04362 1.037109 1.672162e-34

From the PSIS comparison, we can see that m11.4, which includes actor effects and treatment effects, is the best model (according to PSIS). That means that the actor effects are actually much more important than the treatment effects, because m11.3 includes treatment effects and is not noticebly different from the model that only includes an overall intercept.

I also fit my own new model, m11.5, which only includes an actor effect and not a treatment effect. Let’s compare that to the full model, m11.4.

:: compare (.4 , m11.5 ,sort = "PSIS" , func = PSIS

PSIS SE dPSIS dSE pPSIS weight

m11.4 532.4716 18.97652 0.000000 NA 8.593902 0.98673682

m11.5 541.0905 17.90472 8.618824 6.483193 5.736835 0.01326318

The two models are essentially indistinguishable by PSIS, which sort of implies that knowing the treatment group doesn’t give us any predictive power. I wonder if this would be different if we split the treatment group up into its two constituent effects.

$ condition <- d$ condition + 1 $ prosoc_left <- d$ prosoc_left + 1 .6 <- rethinking:: ulam (flist = alist (~ dbinom (1 , p),logit (p) <- a[actor] + b[condition],~ dnorm (0 , 1.5 ),~ dnorm (0 , 1.5 )data = dat_list,chains = 4 , cores = 4 ,log_lik = TRUE

In file included from stan/src/stan/model/model_header.hpp:5:

stan/src/stan/model/model_base_crtp.hpp:205:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, std::vector<double>&, std::vector<int>&, std::vector<double>&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_3edff36c47e534dceb57f0a0e4b40178_model_namespace::ulam_cmdstanr_3edff36c47e534dceb57f0a0e4b40178_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

205 | void write_array(stan::rng_t& rng, std::vector<double>& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c71be64c5.hpp:508: note: by 'ulam_cmdstanr_3edff36c47e534dceb57f0a0e4b40178_model_namespace::ulam_cmdstanr_3edff36c47e534dceb57f0a0e4b40178_model::write_array'

508 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

stan/src/stan/model/model_base_crtp.hpp:136:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, Eigen::VectorXd&, Eigen::VectorXd&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_3edff36c47e534dceb57f0a0e4b40178_model_namespace::ulam_cmdstanr_3edff36c47e534dceb57f0a0e4b40178_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; Eigen::VectorXd = Eigen::Matrix<double, -1, 1>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

136 | void write_array(stan::rng_t& rng, Eigen::VectorXd& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c71be64c5.hpp:508: note: by 'ulam_cmdstanr_3edff36c47e534dceb57f0a0e4b40178_model_namespace::ulam_cmdstanr_3edff36c47e534dceb57f0a0e4b40178_model::write_array'

508 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

Running MCMC with 4 parallel chains, with 1 thread(s) per chain...

Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 1 finished in 1.9 seconds.

Chain 2 finished in 1.9 seconds.

Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 3 finished in 2.0 seconds.

Chain 4 finished in 2.0 seconds.

All 4 chains finished successfully.

Mean chain execution time: 1.9 seconds.

Total execution time: 2.1 seconds.

.7 <- rethinking:: ulam (flist = alist (~ dbinom (1 , p),logit (p) <- a[actor] + b[prosoc_left],~ dnorm (0 , 1.5 ),~ dnorm (0 , 1.5 )data = dat_list,chains = 4 , cores = 4 ,log_lik = TRUE

In file included from stan/src/stan/model/model_header.hpp:5:

stan/src/stan/model/model_base_crtp.hpp:205:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, std::vector<double>&, std::vector<int>&, std::vector<double>&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_790799fad754433849bd02c357aa0c90_model_namespace::ulam_cmdstanr_790799fad754433849bd02c357aa0c90_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

205 | void write_array(stan::rng_t& rng, std::vector<double>& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c2285bf.hpp:508: note: by 'ulam_cmdstanr_790799fad754433849bd02c357aa0c90_model_namespace::ulam_cmdstanr_790799fad754433849bd02c357aa0c90_model::write_array'

508 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

stan/src/stan/model/model_base_crtp.hpp:136:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, Eigen::VectorXd&, Eigen::VectorXd&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_790799fad754433849bd02c357aa0c90_model_namespace::ulam_cmdstanr_790799fad754433849bd02c357aa0c90_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; Eigen::VectorXd = Eigen::Matrix<double, -1, 1>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

136 | void write_array(stan::rng_t& rng, Eigen::VectorXd& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c2285bf.hpp:508: note: by 'ulam_cmdstanr_790799fad754433849bd02c357aa0c90_model_namespace::ulam_cmdstanr_790799fad754433849bd02c357aa0c90_model::write_array'

508 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

Running MCMC with 4 parallel chains, with 1 thread(s) per chain...

Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 3 finished in 1.9 seconds.

Chain 4 finished in 1.9 seconds.

Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 1 finished in 2.0 seconds.

Chain 2 finished in 2.0 seconds.

All 4 chains finished successfully.

Mean chain execution time: 1.9 seconds.

Total execution time: 2.1 seconds.

:: compare (.4 , m11.5 , m11.6 , m11.7 ,sort = "PSIS" , func = PSIS

PSIS SE dPSIS dSE pPSIS weight

m11.7 530.2222 19.05404 0.00000 NA 6.875426 0.750215500

m11.4 532.4716 18.97652 2.24947 2.637975 8.593902 0.243623869

m11.5 541.0905 17.90472 10.86829 7.175685 5.736835 0.003274658

m11.6 541.3432 18.36097 11.12100 7.594034 6.816366 0.002885973

While all four of the models are basically indistinguishable in terms of PSIS, the fact that m11.7 had the best PSIS and m11.7 and m11.4 were slightly better than m11.6 and m11.5, this could suggest that the prosoc_left variable is slightly more important than the condition variable.

11H2

For this problem, we’ll model salmon pirating attempts by bald eagles in Washington state. First we need to set up the data.

data (eagles, package = "MASS" )<- list (y = eagles$ y,n = eagles$ n,P = ifelse (eagles$ P == "S" , 0 , 1 ),A = ifelse (eagles$ A == "I" , 0 , 1 ),V = ifelse (eagles$ V == "S" , 0 , 1 )

First we’ll build a model that considers the three different predictors independently in an aggregated binomial framework. We want to fit this model with both quap and ulam and see if there are any major differences.

<- rethinking:: ulam (alist (~ dbinom (n, p),logit (p) <- a + bp * P + ba * A + bv * V,~ dnorm (0 , 1.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.5 )data = eagle_data,chains = 4 , cores = 4

In file included from stan/src/stan/model/model_header.hpp:5:

stan/src/stan/model/model_base_crtp.hpp:205:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, std::vector<double>&, std::vector<int>&, std::vector<double>&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_dc511ed7ea10c13f17266b49a99b8d99_model_namespace::ulam_cmdstanr_dc511ed7ea10c13f17266b49a99b8d99_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

205 | void write_array(stan::rng_t& rng, std::vector<double>& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c66dc7c79.hpp:445: note: by 'ulam_cmdstanr_dc511ed7ea10c13f17266b49a99b8d99_model_namespace::ulam_cmdstanr_dc511ed7ea10c13f17266b49a99b8d99_model::write_array'

445 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

stan/src/stan/model/model_base_crtp.hpp:136:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, Eigen::VectorXd&, Eigen::VectorXd&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_dc511ed7ea10c13f17266b49a99b8d99_model_namespace::ulam_cmdstanr_dc511ed7ea10c13f17266b49a99b8d99_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; Eigen::VectorXd = Eigen::Matrix<double, -1, 1>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

136 | void write_array(stan::rng_t& rng, Eigen::VectorXd& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c66dc7c79.hpp:445: note: by 'ulam_cmdstanr_dc511ed7ea10c13f17266b49a99b8d99_model_namespace::ulam_cmdstanr_dc511ed7ea10c13f17266b49a99b8d99_model::write_array'

445 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

Running MCMC with 4 parallel chains, with 1 thread(s) per chain...

Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 1 finished in 0.1 seconds.

Chain 2 finished in 0.1 seconds.

Chain 3 finished in 0.1 seconds.

Chain 4 finished in 0.1 seconds.

All 4 chains finished successfully.

Mean chain execution time: 0.1 seconds.

Total execution time: 0.3 seconds.

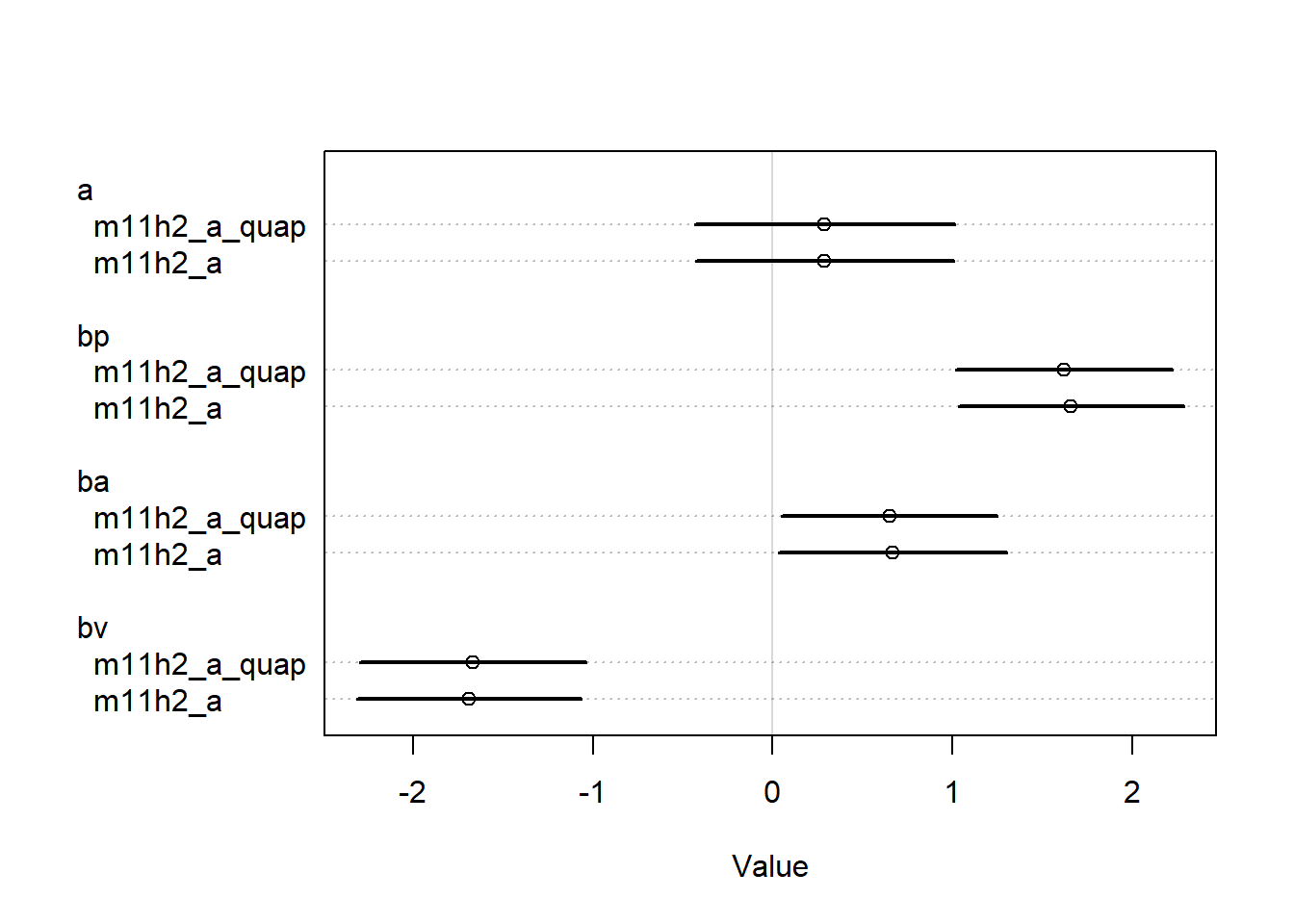

<- rethinking:: quap (alist (~ dbinom (n, p),logit (p) <- a + bp * P + ba * A + bv * V,~ dnorm (0 , 1.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.5 )data = eagle_datalayout (1 ):: coeftab (m11h2_a, m11h2_a_quap) |> rethinking:: coeftab_plot ()

We can see that the quap estimates are identical, so we’ll use the quap estimates to get our predictions, I guess.

Now we need to intercept the estimates. We can first take a look at the coefficients.

:: precis (m11h2_a_quap)

mean sd 5.5% 94.5%

a 0.2936490 0.3681379 -0.2947064 0.8820044

bp 1.6211363 0.3063651 1.1315057 2.1107669

ba 0.6516624 0.3054238 0.1635362 1.1397886

bv -1.6731447 0.3191361 -2.1831858 -1.1631036

Wee see that the effects of \(P\) and \(A\) both seem to have their entire probability density at positive values, while the coefficient for \(V\) has a negative effect. However, these coefficients don’t tell us that much – we can exponentiate them to get some odds ratios, though.

:: precis (m11h2_a_quap, pars = c ("bp" , "ba" , "bv" )) |> unclass () |> as.data.frame () |> :: select (- sd) |> :: mutate (dplyr:: across (dplyr:: everything (), \(x) round (exp (x), 2 ))) |> ` rownames<- ` (c ("bp" , "ba" , "bv" ))

mean X5.5. X94.5.

bp 5.06 3.10 8.25

ba 1.92 1.18 3.13

bv 0.19 0.11 0.31

This gives us a bit more understandable estimate of the effect, but we really want to look at the predicted probabilities and success counts to really understand what is going on.

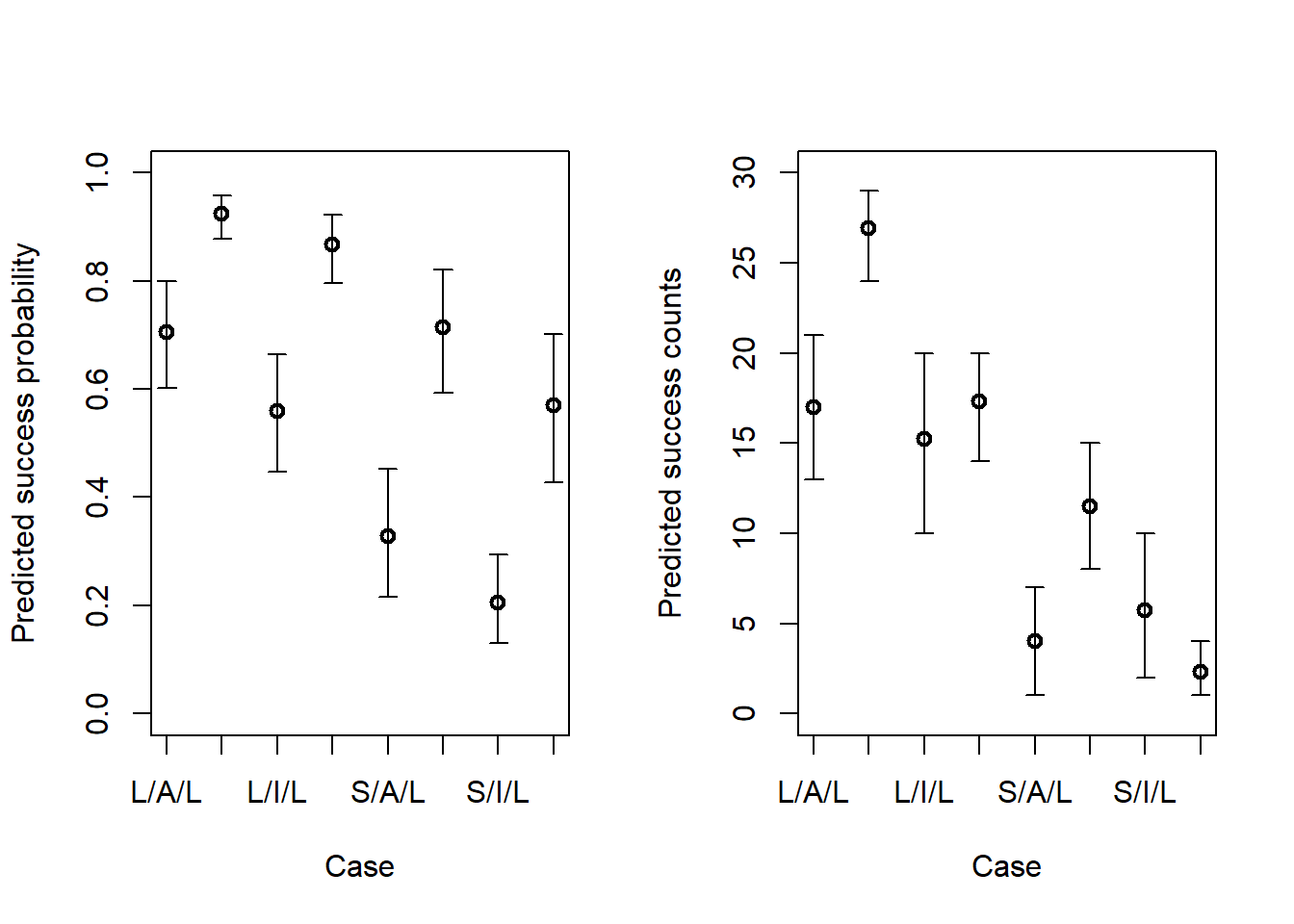

Code

# Predicted probabilities <- rethinking:: link (m11h2_a_quap)<- apply (pred_probs, 2 , mean)<- apply (pred_probs, 2 , rethinking:: PI)# Predicted success counts <- rethinking:: sim (m11h2_a_quap)<- apply (pred_counts, 2 , mean)<- apply (pred_counts, 2 , rethinking:: PI)# Making plots <- paste (ifelse (eagle_data$ P == 0 , "S" , "L" ),ifelse (eagle_data$ A == 0 , "I" , "A" ),ifelse (eagle_data$ V == 0 , "S" , "L" ),sep = "/" layout (matrix (1 : 2 , nrow = 1 ))plot (1 : 8 , pred_prob_mean,xlab = "Case" ,ylab = "Predicted success probability" ,lwd = 2 ,xlim = c (1 , 8 ),ylim = c (0 , 1 ),xaxt = "n" axis (1 , at = 1 : 8 , labels = labs)arrows (x0 = 1 : 8 ,y0 = pred_prob_pi[1 , ],x = 1 : 8 ,y = pred_prob_pi[2 , ],length = 0.05 ,angle = 90 ,code = 3 plot (1 : 8 , pred_count_mean,xlab = "Case" ,ylab = "Predicted success counts" ,lwd = 2 ,xlim = c (1 , 8 ),ylim = c (0 , 30 ),xaxt = "n" axis (1 , at = 1 : 8 , labels = labs)arrows (x0 = 1 : 8 ,y0 = pred_count_pi[1 , ],x = 1 : 8 ,y = pred_count_pi[2 , ],length = 0.05 ,angle = 90 ,code = 3

In general, trying to understand three categorical effects simultaneously is a bit tough. But the cases on the x axis are three letters representing the size of the pirate, the age of the pirate, and the size of the victim respectively. We can see that, in general, pirating from a large bird is much less successful than pirating from a small bird, across all categories of pirate age and size. In general, it also looks like large, adult pirates are the most successful overall.

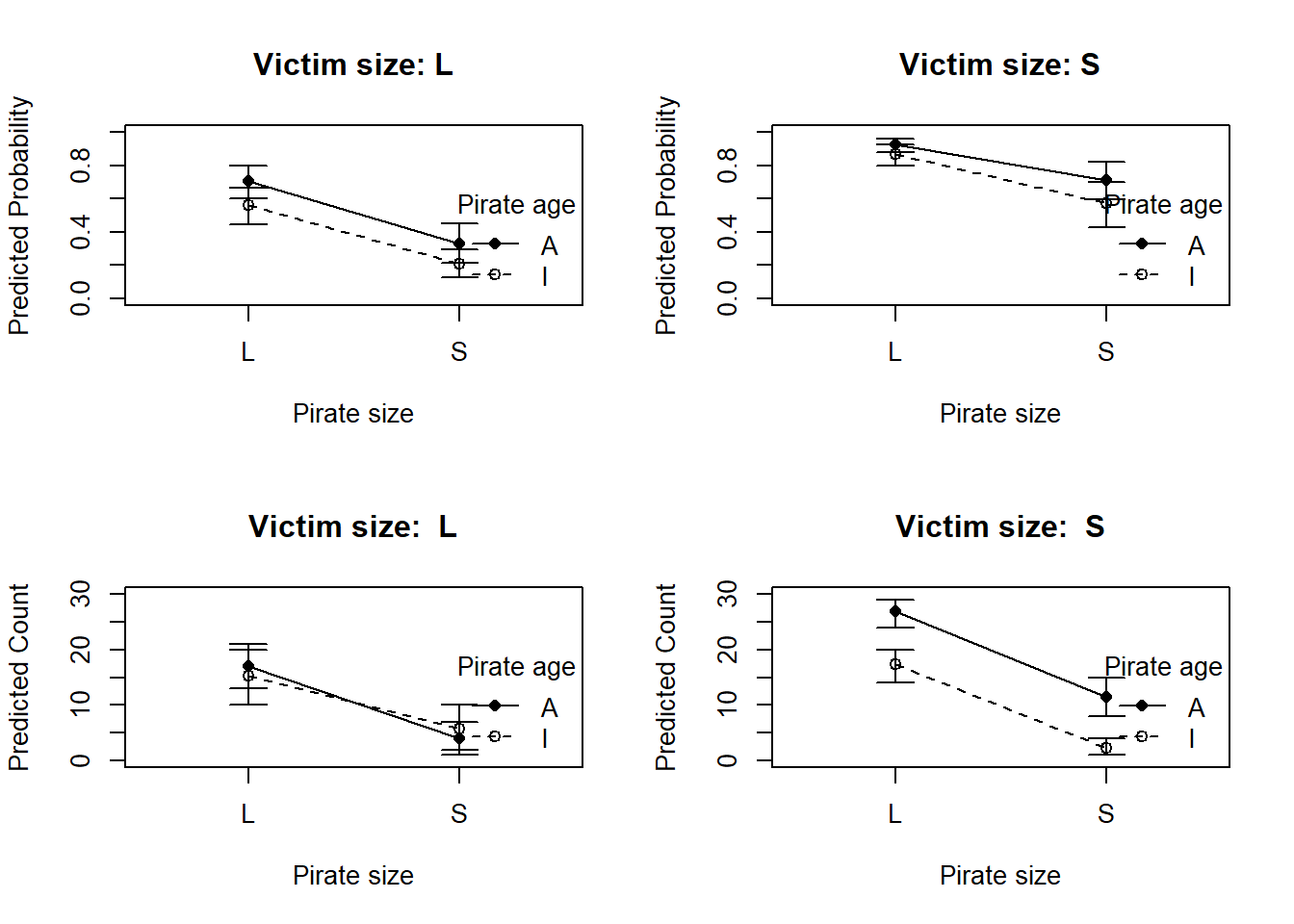

We can also plot this in a different way using a traditional interaction plot.

Code

<- eagles |> :: mutate (pred_prob_mean = pred_prob_mean,pred_prob_lwr = pred_prob_pi[1 , ],pred_prob_upr = pred_prob_pi[2 , ],pred_count_mean = pred_count_mean,pred_count_lwr = pred_count_pi[1 , ],pred_count_upr = pred_count_pi[2 , ]layout (matrix (1 : 4 , nrow = 2 , byrow = TRUE ))# Set up plotting layout: one plot per level of V <- unique (edp$ V)# Define symbols and line types for A <- unique (edp$ A)<- c ("A" = 16 , "I" = 1 )<- c ("A" = 1 , "I" = 2 )for (v in V_levels) {<- edp[edp$ V == v, ]plot (NA , xlim = c (0.5 , 2.5 ), ylim = c (0 , 1 ), xaxt = "n" ,xlab = "Pirate size" , ylab = "Predicted Probability" , main = paste ("Victim size:" , v))axis (1 , at = 1 : 2 , labels = c ("L" , "S" ))for (a in A_levels) {<- sub[sub$ A == a, ]<- ifelse (dat$ P == "L" , 1 , 2 )# CI bars arrows (xvals, dat$ pred_prob_lwr, xvals, dat$ pred_prob_upr, angle = 90 , code = 3 , length = 0.1 , col = "black" )# Mean points and lines lines (xvals, dat$ pred_prob_mean, lty = lty_vals[a], col = "black" )points (xvals, dat$ pred_prob_mean, pch = pch_vals[a], col = "black" , bg = "black" )legend ("bottomright" , legend = A_levels,pch = pch_vals, lty = lty_vals, title = "Pirate age" , bty = "n" )# Set up plotting layout: one plot per level of V <- unique (edp$ V)# Define symbols and line types for A <- unique (edp$ A)<- c ("A" = 16 , "I" = 1 )<- c ("A" = 1 , "I" = 2 )for (v in V_levels) {<- edp[edp$ V == v, ]plot (NA , xlim = c (0.5 , 2.5 ), ylim = c (0 , 30 ), xaxt = "n" ,xlab = "Pirate size" , ylab = "Predicted Count" , main = paste ("Victim size: " , v))axis (1 , at = 1 : 2 , labels = c ("L" , "S" ))for (a in A_levels) {<- sub[sub$ A == a, ]<- ifelse (dat$ P == "L" , 1 , 2 )# CI bars arrows (xvals, dat$ pred_count_lwr, xvals, dat$ pred_count_upr, angle = 90 , code = 3 , length = 0.1 , col = "black" )# Mean points and lines lines (xvals, dat$ pred_count_mean, lty = lty_vals[a], col = "black" )points (xvals, dat$ pred_count_mean, pch = pch_vals[a], col = "black" , bg = "black" )legend ("bottomright" , legend = A_levels,pch = pch_vals, lty = lty_vals, title = "Pirate age" , bty = "n" )

Since we already saw that the affect of victim size was important, I made that variable the faceting variable here to see if we can visually see any interactions between pirate age and size, since we’ll look at that in the next problem. The CI’s overlap a lot, but in general it seems adult pirates are generally better, but pirate size appears to be more important in determining overall success.

In a real analysis, we should also look at the marginal effects of each predictor (how much we expect success probability or count to increase if we change one variable at a time, which is NOT estimated by the odds ratio due to the nonlinear link function), but we haven’t really discussed that at this point in the book so I’ll ignore it.

Next we’ll fit a model with an iteraction between pirate size and age, and compare the models with WAIC. Just for fun, I also decided to compare the models with all two-way interactions, and a model that also has a three-way interaction. But first we’ll answer the actual question.

<- rethinking:: quap (alist (~ dbinom (n, p),logit (p) <- a + bp * P + ba * A + bv * V + gpa * P * A,~ dnorm (0 , 1.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.25 )data = eagle_data<- rethinking:: quap (alist (~ dbinom (n, p),logit (p) <- a + bp * P + ba * A + bv * V + * P * A + gpv * P * V + gav * A * V,~ dnorm (0 , 1.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.25 ),~ dnorm (0 , 0.25 ),~ dnorm (0 , 0.25 )data = eagle_data<- rethinking:: quap (alist (~ dbinom (n, p),logit (p) <- a + bp * P + ba * A + bv * V + * P * A + gpv * P * V + gav * A * V + * P * A * V,~ dnorm (0 , 1.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.5 ),~ dnorm (0 , 0.25 ),~ dnorm (0 , 0.25 ),~ dnorm (0 , 0.25 ),~ dnorm (0 , 0.125 )data = eagle_data

So like I said, first we’ll answer the actual question.

:: compare (m11h2_a_quap, m11h2_c)

WAIC SE dWAIC dSE pWAIC weight

m11h2_a_quap 59.21206 11.29599 0.0000000 NA 8.235358 0.5142964

m11h2_c 59.32646 11.41087 0.1144025 0.8295544 8.269835 0.4857036

The two models are basically equivalent in WAIC, indicating that pirate age and pirate size do not seem to interact. Probably large birds are always more likely to succeed and small birds are always more likely to fail, regardless of whether they are adults, and any learned experience in how to pirate is independent of the bird’s size.

If we compare all of the models with interaction terms, we can see that in general none of the interaction terms make the model do better than the original model with no interactions. I wonder if this is mostly because we have small counts of some combinations – in general, we were much less likely to observe small birds acting as pirates in the first place, and we were less likely to observe successes across all of the small pirate groups.

:: compare (m11h2_a_quap, m11h2_c, m11h2_d, m11h2_e)

WAIC SE dWAIC dSE pWAIC weight

m11h2_a_quap 58.82845 11.48208 0.0000000 NA 7.870400 0.3574550

m11h2_e 59.50629 11.44157 0.6778414 1.1042359 8.791410 0.2547006

m11h2_c 59.80259 11.86056 0.9741382 0.8174611 8.423275 0.2196291

m11h2_d 60.33598 12.38814 1.5075296 1.4153978 9.062191 0.1682153

11H3

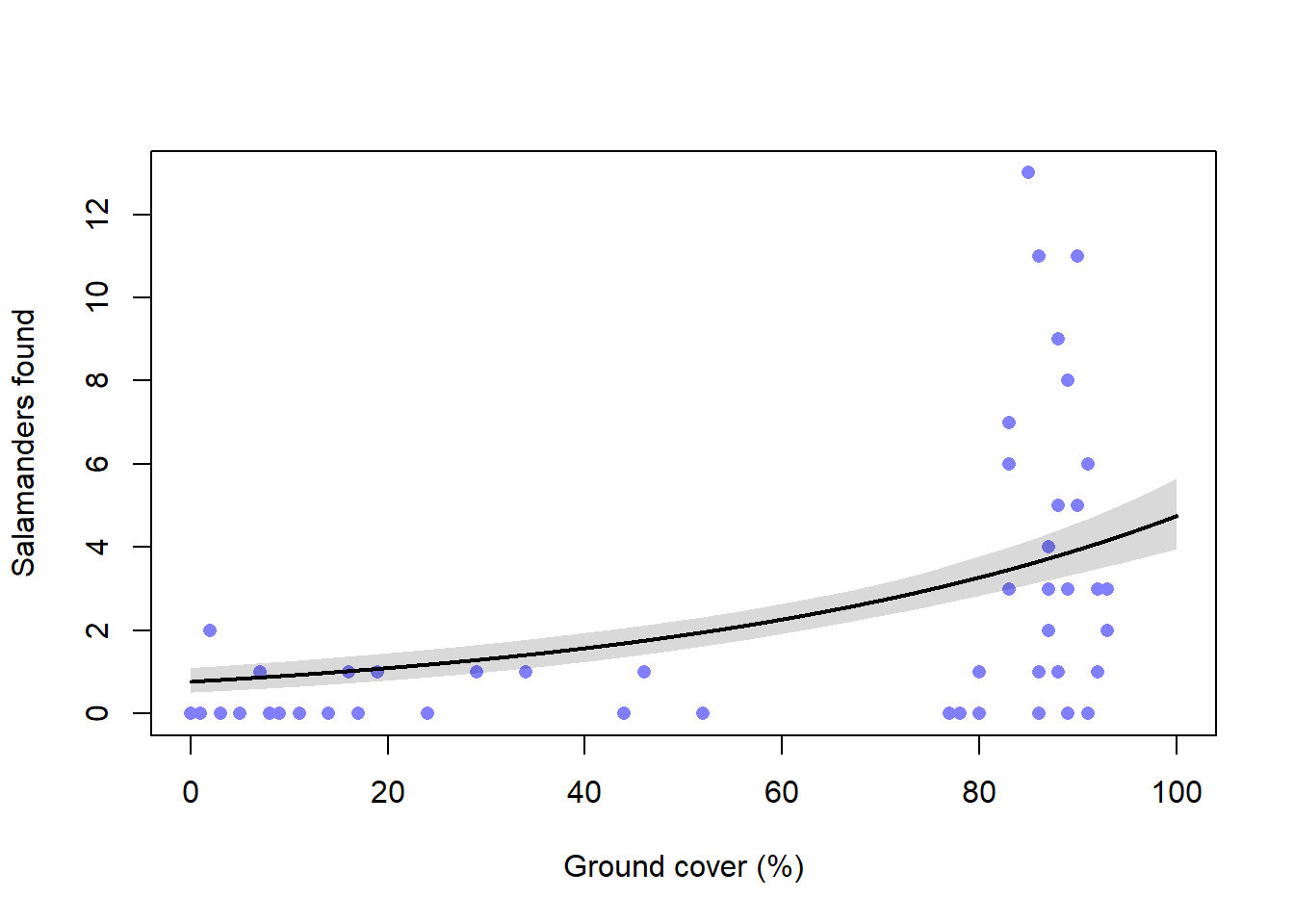

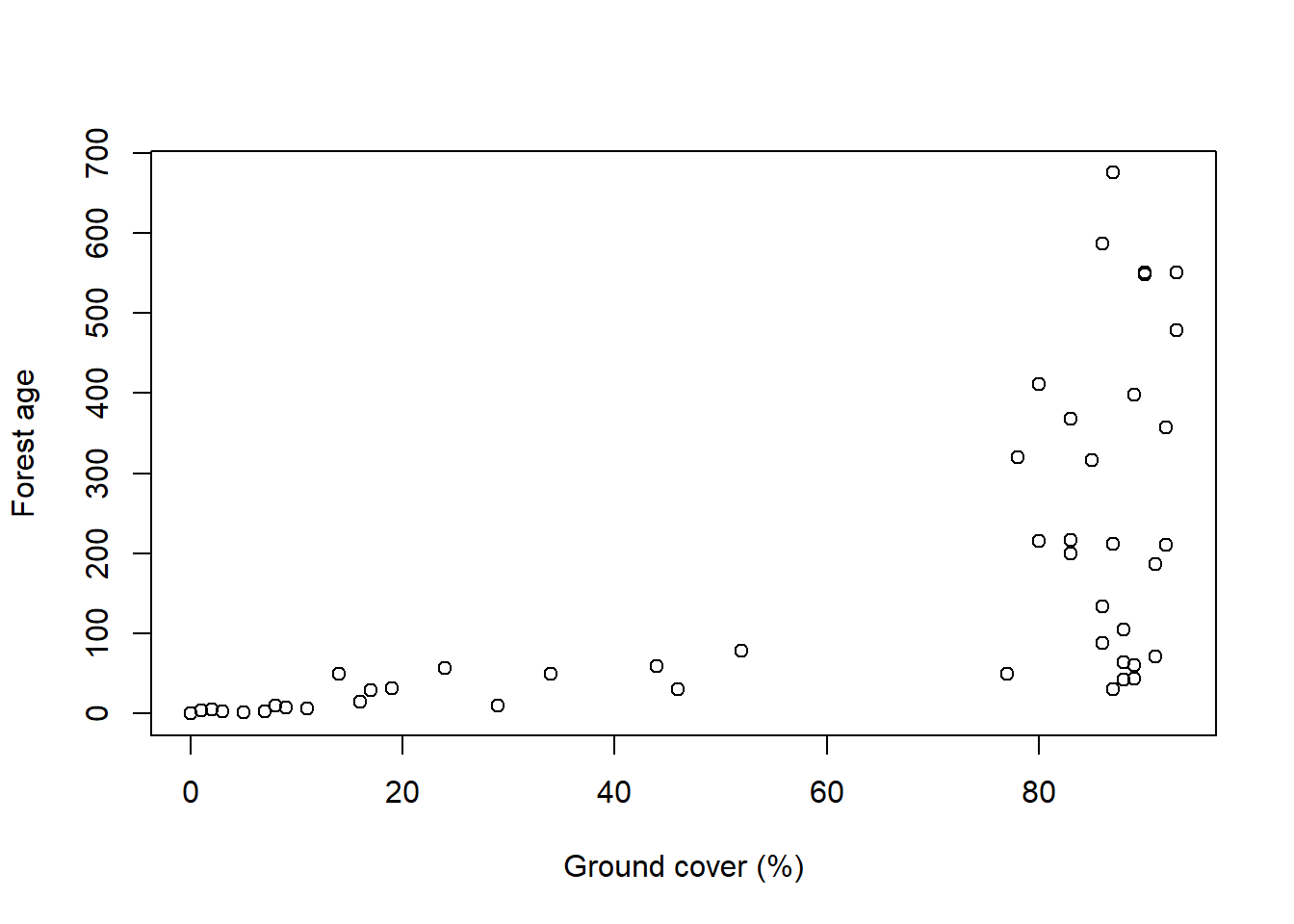

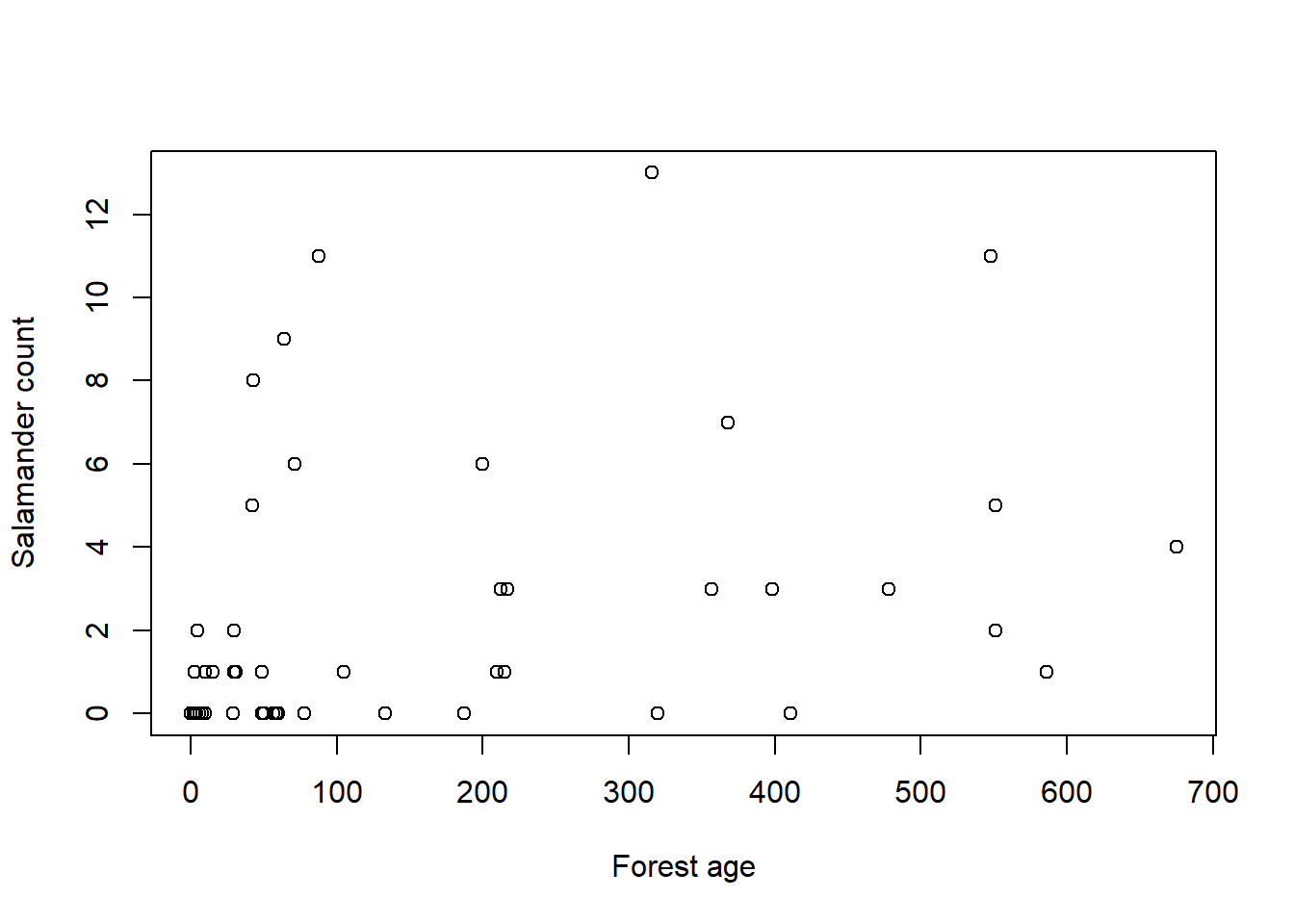

For this problem, we’ll build a model of salamander density, so we better start by cleaning up the salamander data. I kept getting really shitty models until I realized I needed to standardize the predictors because the scale is so different from the count scale of the outcome. But once I did that, I got models that worked alright.

data ("salamanders" , package = "rethinking" )<- list (n = salamanders$ SALAMAN,gc = standardize (salamanders$ PCTCOVER),fa = standardize (salamanders$ FORESTAGE)

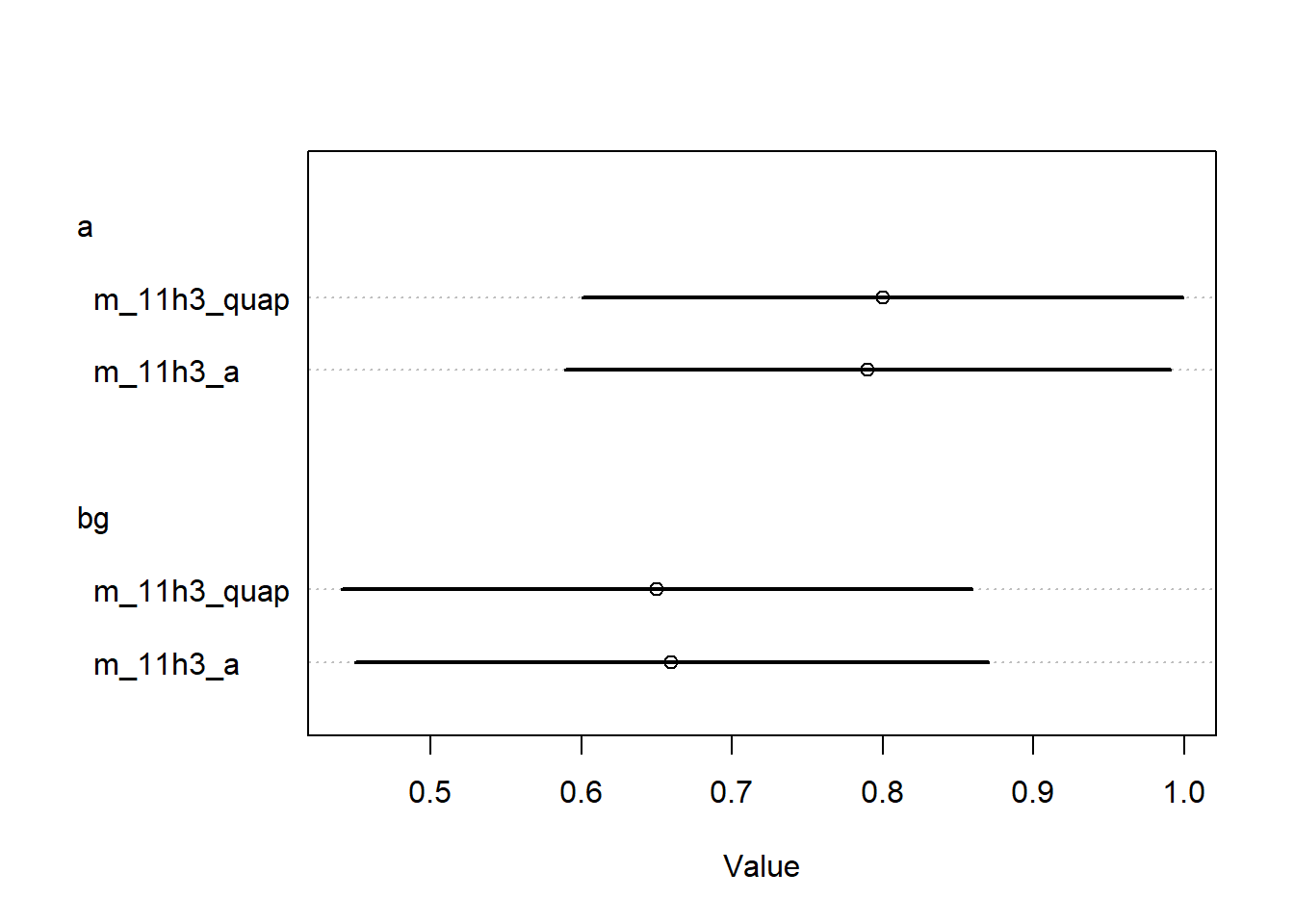

First we’ll build a model relating salamander density to percent cover using a Poisson model. Again we need to compare quap and ulam.

<- rethinking:: ulam (alist (~ dpois (lambda),log (lambda) <- a + bg * gc,~ dnorm (3 , 0.5 ),~ dnorm (0 , 0.2 )data = s_dat,chains = 4 , cores = 4 ,log_lik = TRUE

In file included from stan/src/stan/model/model_header.hpp:5:

stan/src/stan/model/model_base_crtp.hpp:205:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, std::vector<double>&, std::vector<int>&, std::vector<double>&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_55fa12cdc55a5ce3c1c627d810695ba0_model_namespace::ulam_cmdstanr_55fa12cdc55a5ce3c1c627d810695ba0_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

205 | void write_array(stan::rng_t& rng, std::vector<double>& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c2f397abf.hpp:460: note: by 'ulam_cmdstanr_55fa12cdc55a5ce3c1c627d810695ba0_model_namespace::ulam_cmdstanr_55fa12cdc55a5ce3c1c627d810695ba0_model::write_array'

460 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

stan/src/stan/model/model_base_crtp.hpp:136:8: warning: 'void stan::model::model_base_crtp<M>::write_array(stan::rng_t&, Eigen::VectorXd&, Eigen::VectorXd&, bool, bool, std::ostream*) const [with M = ulam_cmdstanr_55fa12cdc55a5ce3c1c627d810695ba0_model_namespace::ulam_cmdstanr_55fa12cdc55a5ce3c1c627d810695ba0_model; stan::rng_t = boost::random::mixmax_engine<17, 36, 0>; Eigen::VectorXd = Eigen::Matrix<double, -1, 1>; std::ostream = std::basic_ostream<char>]' was hidden [-Woverloaded-virtual=]

136 | void write_array(stan::rng_t& rng, Eigen::VectorXd& theta,

| ^~~~~~~~~~~

C:/Users/Zane/AppData/Local/Temp/RtmpSCWF6a/model-305c2f397abf.hpp:460: note: by 'ulam_cmdstanr_55fa12cdc55a5ce3c1c627d810695ba0_model_namespace::ulam_cmdstanr_55fa12cdc55a5ce3c1c627d810695ba0_model::write_array'

460 | write_array(RNG& base_rng, std::vector<double>& params_r, std::vector<int>&

Running MCMC with 4 parallel chains, with 1 thread(s) per chain...

Chain 1 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 1 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 1 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 1 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 1 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 1 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 1 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 1 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 1 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 2 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 2 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 2 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 2 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 2 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 2 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 2 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 2 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 2 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 2 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 3 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 3 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 3 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 3 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 3 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 3 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 3 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 3 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 3 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 3 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 3 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 4 Iteration: 1 / 1000 [ 0%] (Warmup)

Chain 4 Iteration: 100 / 1000 [ 10%] (Warmup)

Chain 4 Iteration: 200 / 1000 [ 20%] (Warmup)

Chain 4 Iteration: 300 / 1000 [ 30%] (Warmup)

Chain 4 Iteration: 400 / 1000 [ 40%] (Warmup)

Chain 4 Iteration: 500 / 1000 [ 50%] (Warmup)

Chain 4 Iteration: 501 / 1000 [ 50%] (Sampling)

Chain 4 Iteration: 600 / 1000 [ 60%] (Sampling)

Chain 1 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 1 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 1 finished in 0.1 seconds.

Chain 2 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 2 finished in 0.1 seconds.

Chain 3 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 3 finished in 0.1 seconds.

Chain 4 Iteration: 700 / 1000 [ 70%] (Sampling)

Chain 4 Iteration: 800 / 1000 [ 80%] (Sampling)

Chain 4 Iteration: 900 / 1000 [ 90%] (Sampling)

Chain 4 Iteration: 1000 / 1000 [100%] (Sampling)

Chain 4 finished in 0.1 seconds.

All 4 chains finished successfully.

Mean chain execution time: 0.1 seconds.

Total execution time: 0.3 seconds.

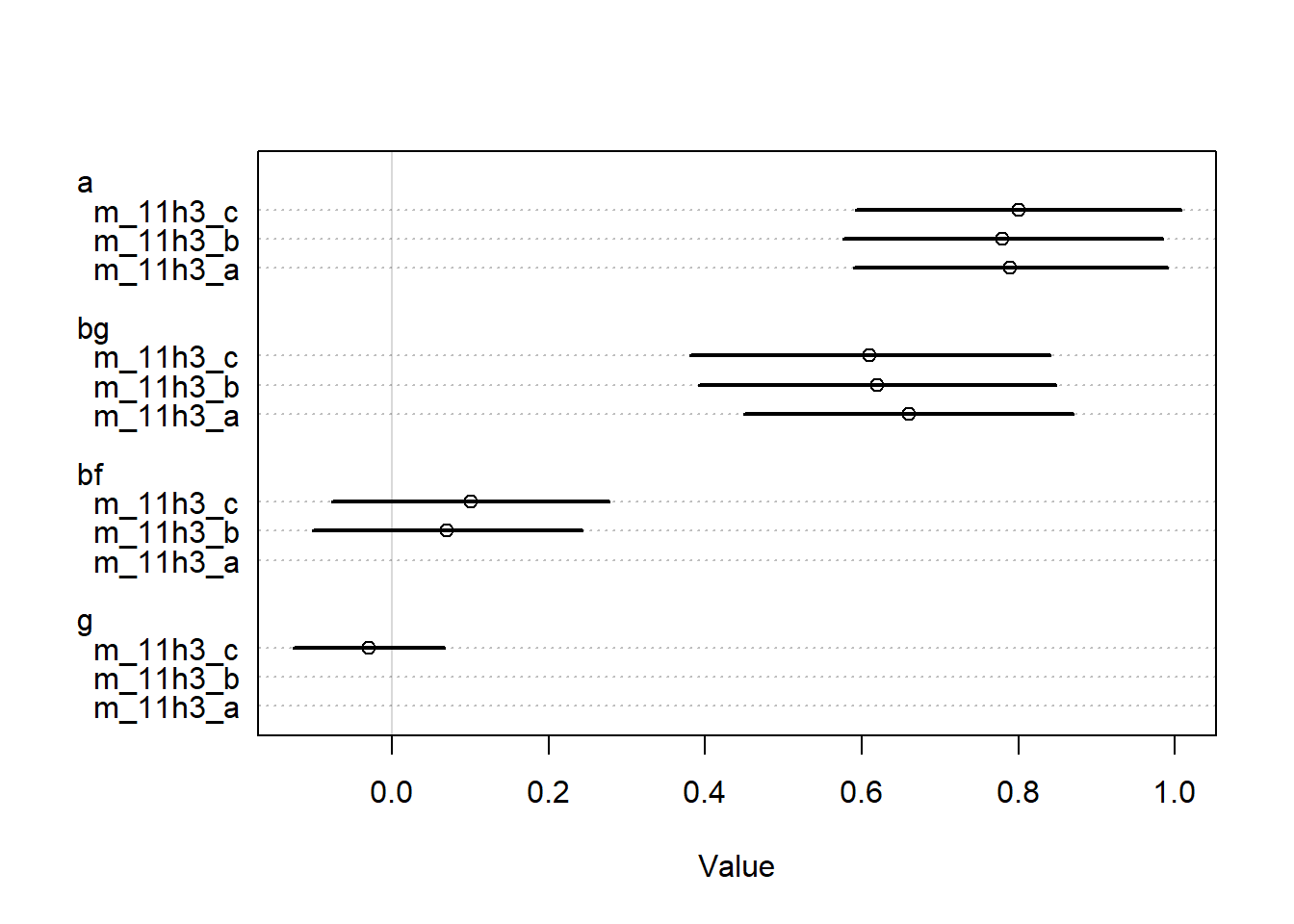

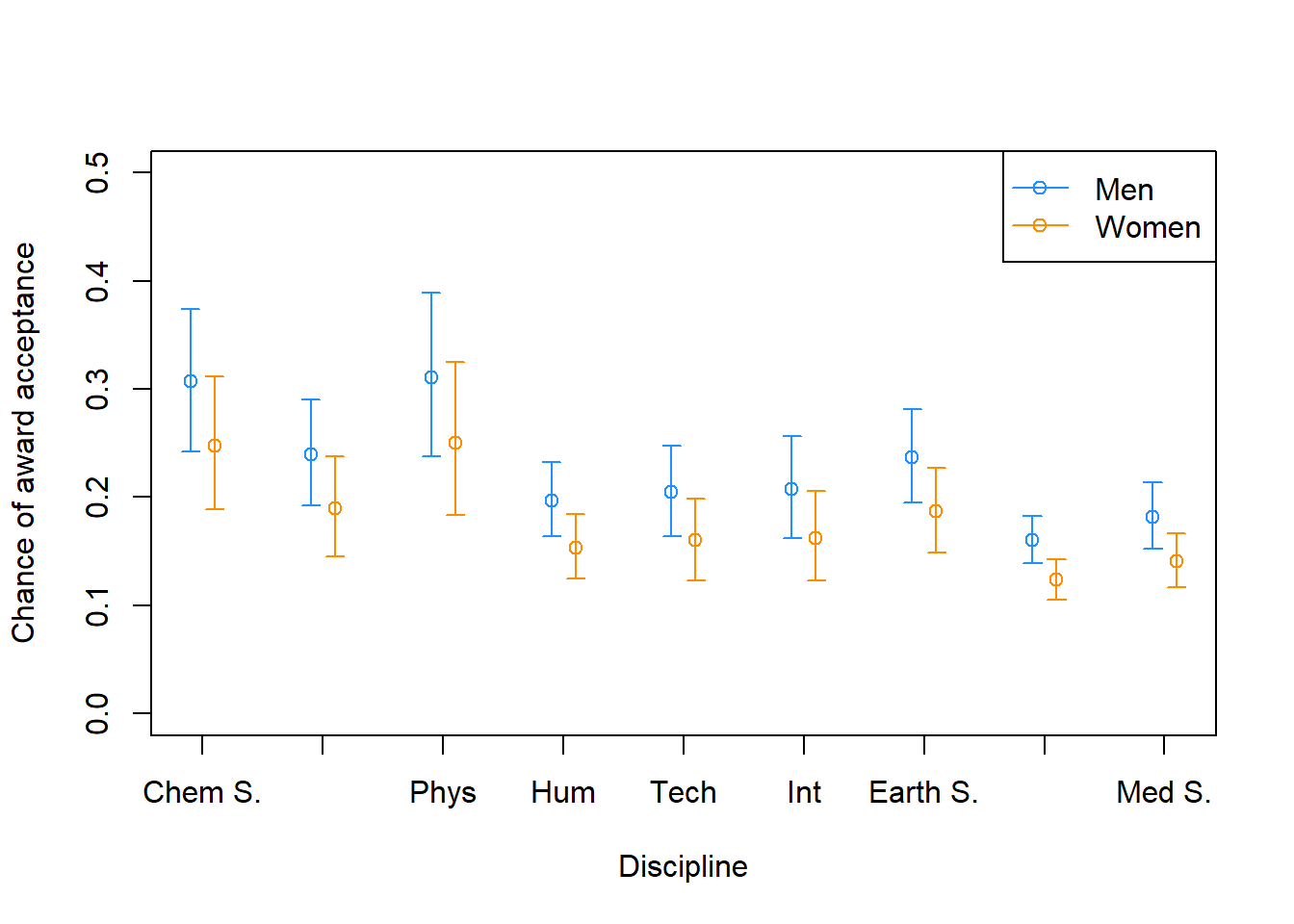

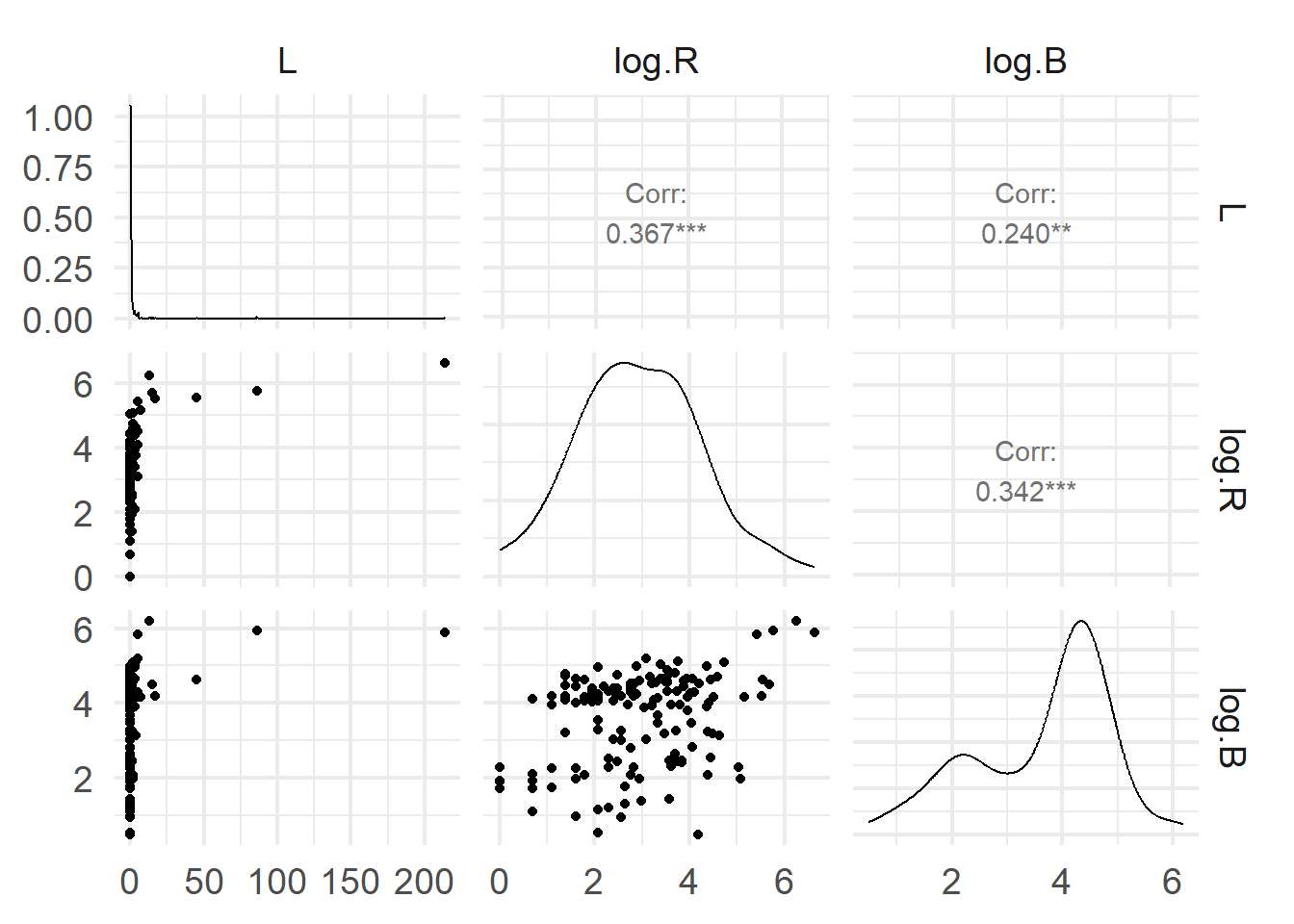

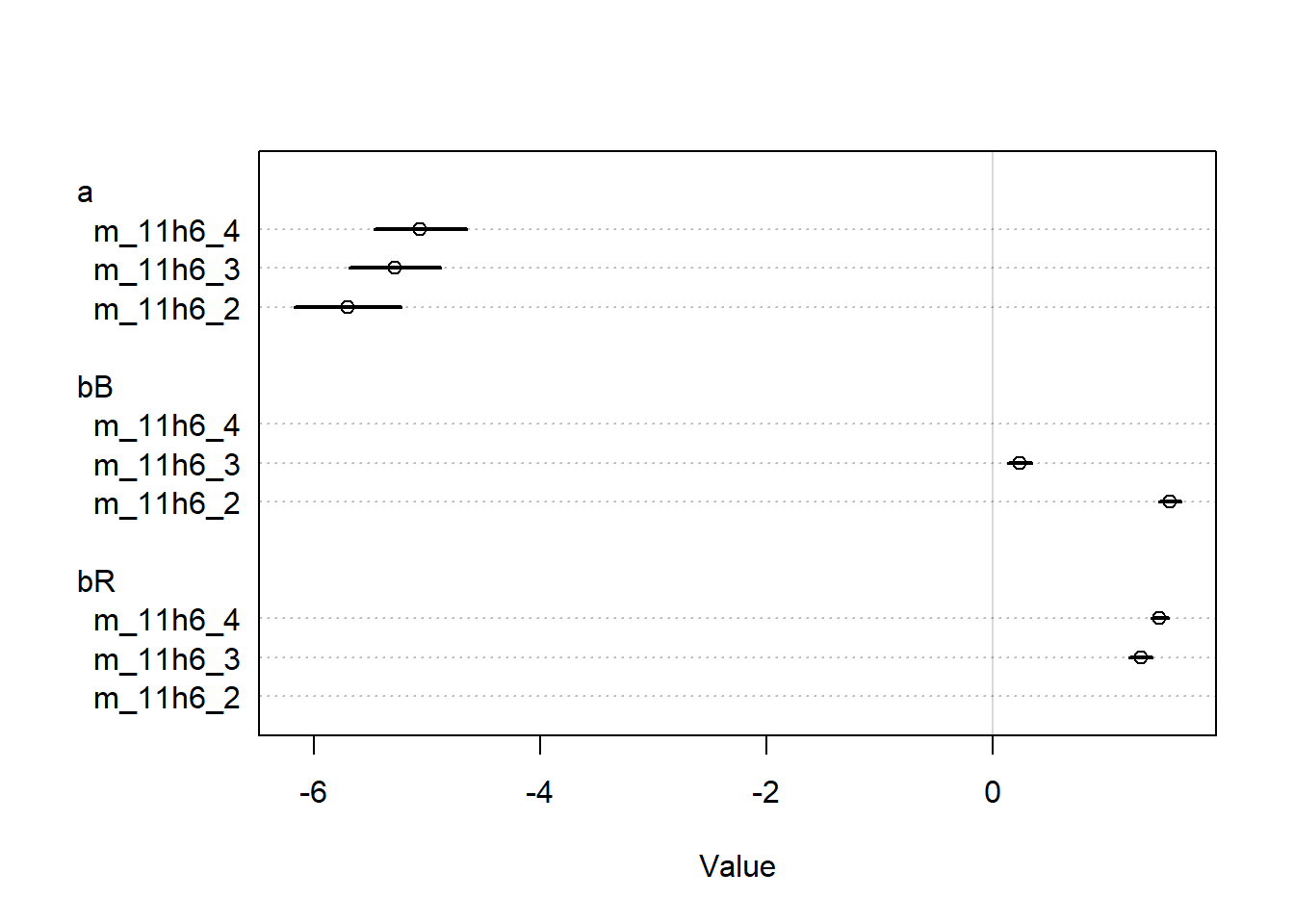

<- rethinking:: quap (alist (~ dpois (lambda),log (lambda) <- a + bg * gc,~ dnorm (3 , 0.5 ),~ dnorm (0 , 0.2 )data = s_datlayout (1 ):: coeftab (m_11h3_a, m_11h3_quap) |> rethinking:: coeftab_plot ()